VisualBasic Machine Learning, Step 1: Q-Learning

Teaching a machine to play the snake game.

Introduction

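Over the past few weeks, Google's AlphaGo has drawn worldwide attention. This artificial neural network has shown remarkable ability: using deep learning techniques, AlphaGo even defeated one of the world's top Go players.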

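For now, AI that can truly match us still exists only in the movies, because our world is too complex for an AI program. The world of a video game, however, is a simplified version of the real one: we cannot yet create a human-like AI for the real world, but creating an AI player for a game world is possible.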

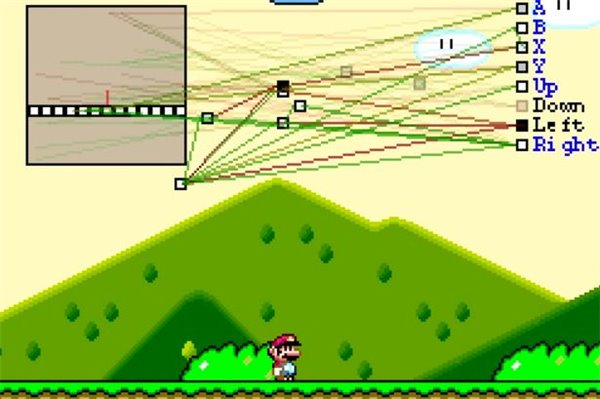

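For me, nothing is more fun than playing video games, and Mario is my favorite, because the NES game Mario Bros. was part of my childhood. MarI/O, a Mario-playing program that SethBling built with evolutionary neural network techniques, is what inspired me to get into machine learning.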

MarI/O video: https://www.youtube.com/watch?v=qv6UVOQ0F44

Inspired by the great work on MarI/O, I decided to develop my own AI engine framework, not for serious research but just for fun and as a personal hobby.

Q-Learning Details

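Q-Learning is a model-free machine learning algorithm, and for an ML beginner like me it is one of the simplest algorithms to start with. In general, Q-Learning works in three phases: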

Phase 1: Input the environment state

How does the snake see the world?

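The QL_AI lets the snake see the world through two pieces of information, from which the QTable generates an identifying state:

- The position of the food relative to the snake
- The current movement direction of the snake

```vbnet
' Get environment state as input
Dim pre = Distance(_snakeGame.Snake.Location, _snakeGame.food.Location)
Call _stat.SetState(_stat.GetNextState(Nothing))
```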

```vbnet
Imports System
Imports Microsoft.VisualBasic.DataMining.Framework.QLearning
Imports Microsoft.VisualBasic.GamePads.EngineParts
Imports Microsoft.VisualBasic.GamePads

''' <summary>
''' Environment state inputs
''' </summary>
Public Structure GameControl : Implements ICloneable

    ''' <summary>
    ''' The relative position between the head of the snake and its food
    ''' </summary>
    Dim position As Controls

    ''' <summary>
    ''' The current movement direction of the snake.
    ''' </summary>
    Dim moveDIR As Controls

    ''' <summary>
    ''' Hash key for the QTable
    ''' </summary>
    ''' <returns></returns>
    Public Overrides Function ToString() As String
        Return $"{CInt(position)},{vbTab}{CInt(moveDIR)}"
    End Function

    Public Function Clone() As Object Implements ICloneable.Clone
        Return New GameControl With {
            .position = position,
            .moveDIR = moveDIR
        }
    End Function

End Structure

Public Class QState : Inherits QState(Of GameControl)

    Dim game As Snake.GameEngine

    Sub New(game As Snake.GameEngine)
        Me.game = game
    End Sub

    ''' <summary>
    ''' The position relationship between the snake head and its food
    ''' makes up the current environment state
    ''' </summary>
    ''' <param name="action">The current action</param>
    ''' <returns></returns>
    Public Overrides Function GetNextState(action As Integer) As GameControl
        Dim pos As Controls = Position(game.Snake.Location, game.food.Location, False)
        Dim stat = New GameControl With {
            .moveDIR = game.Snake.Direction,
            .position = pos
        } ' The current movement direction plus the current relative position form one Q-learning state

        Return stat
    End Function

End Class
```
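To make the state encoding concrete, the following minimal sketch shows how a head/food pair and a movement direction could be turned into a QTable key. The Direction enum and the RelativePosition helper (including its rule of letting the axis with the larger offset win) are hypothetical stand-ins for the framework's Controls type and Position function, whose exact behaviour is not shown here; only the key format copies GameControl.ToString.

```vbnet
Imports System
Imports Microsoft.VisualBasic

' Hypothetical stand-in for the framework's Controls enum (values assumed).
Public Enum Direction
    Up = 0
    Down = 1
    Left = 2
    Right = 3
End Enum

Public Module StateEncoding

    ' Hypothetical helper: reduces the food position to one coarse direction
    ' relative to the snake head. Screen coordinates are assumed, so a smaller
    ' Y means "up". The axis with the larger offset wins; ties go horizontal.
    Public Function RelativePosition(headX As Integer, headY As Integer,
                                     foodX As Integer, foodY As Integer) As Direction
        Dim dx As Integer = foodX - headX
        Dim dy As Integer = foodY - headY
        If Math.Abs(dx) >= Math.Abs(dy) Then
            Return If(dx >= 0, Direction.Right, Direction.Left)
        Else
            Return If(dy > 0, Direction.Down, Direction.Up)
        End If
    End Function

    ' Builds the key in the same "position,<TAB>direction" format as GameControl.ToString.
    Public Function StateKey(position As Direction, moveDir As Direction) As String
        Return $"{CInt(position)},{vbTab}{CInt(moveDir)}"
    End Function

End Module
```

For example, with the head at (5, 5) and the food at (9, 6), the food is mostly to the right, so a snake currently moving up would be in the state 3,<TAB>0. Coarse states like this keep the QTable small: 4 positions times 4 directions give at most 16 keys.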

Phase 2: Output control

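The core of the Q-Learning algorithm is the QTable, which basically consists of two elements: an environment state and the Q values of the actions associated with that state. In the code, the index of an element in an action's Q value array stands for the action itself, for example 0 for Up, 1 for Down, and so on. Since the Q-Learning output here has only four direction controls (Up, Down, Left and Right), the action range of the QTable is 4.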

QTable basics

```vbnet
Imports System
Imports System.Collections
Imports System.Collections.Generic
Imports Microsoft.VisualBasic.DataMining.Framework.QLearning.DataModel
Imports Microsoft.VisualBasic

Namespace QLearning

    ''' <summary>
    ''' The heart of the Q-learning algorithm, the QTable contains the table
    ''' which maps states, actions and their Q values. This class has elaborate
    ''' documentation, and should be the focus of the students' body of work
    ''' for the purposes of this tutorial.
    '''
    ''' @author A.Liapis (Original author), A. Hartzen (2013 modifications);
    ''' xie.guigang@gcmodeller.org (2016 modifications)
    ''' </summary>
    Public MustInherit Class QTable(Of T As ICloneable)
        Implements IQTable

        ''' <summary>
        ''' The table variable stores the Q-table, where the state is saved
        ''' directly as the actual map. Each map state has an array of Q values
        ''' for all the actions available for that state.
        ''' </summary>
        ' Note: Dictionary(Of Action) takes a single type argument, so it is the
        ' framework's own keyed collection (keyed by Action.EnvirState), not the
        ' two-argument System.Collections.Generic.Dictionary(Of TKey, TValue).
        Public ReadOnly Property Table As Dictionary(Of Action) _
            Implements IQTable.Table

        ''' <summary>
        ''' The actionRange variable determines the number of actions available
        ''' at any map state, and therefore the number of Q values in each entry
        ''' of the Q-table.
        ''' </summary>
        Public ReadOnly Property ActionRange As Integer Implements IQTable.ActionRange

#Region "E-GREEDY Q-LEARNING SPECIFIC VARIABLES"

        ''' <summary>
        ''' For e-greedy Q-learning, when taking an action, a random number is
        ''' checked against the explorationChance variable: if the number is
        ''' below the explorationChance, then exploration takes place picking
        ''' an action at random. Note that the explorationChance is not a constant
        ''' because it is customary that the exploration chance changes as the
        ''' training goes on.
        ''' </summary>
        Public Property ExplorationChance As Single = 0.05F _
            Implements IQTable.ExplorationChance

        ''' <summary>
        ''' The discount factor is saved as the gammaValue variable. The
        ''' discount factor determines the importance of future rewards.
        ''' If the gammaValue is 0, then the AI will only consider immediate
        ''' rewards, while with a gammaValue near 1 (but below 1), the AI will
        ''' try to maximize the long-term reward even if it is many moves away.
        ''' </summary>
        Public Property GammaValue As Single = 0.9F Implements IQTable.GammaValue

        ''' <summary>
        ''' The learningRate determines how new information affects accumulated
        ''' information from previous instances. If the learningRate is 1, then
        ''' the new information completely overrides any previous information.
        ''' Note that the learningRate is not a constant because it is
        ''' customary that the learningRate changes as the
        ''' training goes on.
        ''' </summary>
        Public Property LearningRate As Single = 0.15F Implements IQTable.LearningRate

#End Region

        ' ... (the remaining members of QTable are shown in the following sections)
```

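For example, in the code shown above, the key of the Table variable in the QTable class is a string that represents an environment state, and the value is the Q values of the actions. In this snake game each entry holds four values: the index of each Q value stands for one of the four direction buttons on the joypad, and the Q values determine which direction button is the best one for the program to press.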

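Initially the QTable dictionary is empty, but as the game goes on, more and more environment states are stored in it, so you can think of the QTable as a person's accumulated experience of doing something.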

Exploration or experience

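In this QL_AI snake controller, we use the ε-greedy algorithm to let the program decide how to handle the current environment state: try something new (exploration) or act on previous experience (exploitation):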

```vbnet
''' <summary>
''' For this example, the getNextAction function uses an e-greedy
''' approach, having exploration happen if the exploration chance
''' is rolled.
''' (Note: the value returned by this function is the index of the selected
''' action, so it may still need to be converted into an actual control.)
''' </summary>
''' <param name="map"> current map (state) </param>
''' <returns> the action to be taken by the calling program </returns>
Public Overridable Function NextAction(map As T) As Integer
    ' Remember the state and action so that UpdateQvalue can reward them later
    _prevState = CType(map.Clone(), T)

    If __randomGenerator.NextDouble() < ExplorationChance Then
        _prevAction = __explore()            ' exploration: random action
    Else
        _prevAction = __getBestAction(map)   ' exploitation: best known action
    End If

    Return _prevAction
End Function
```
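The ε-greedy rule in NextAction can also be written as a standalone function. This is a minimal sketch rather than the framework's code: the Q values are passed in as a plain array and the Random instance is injected so the behaviour is reproducible; EpsilonGreedy and its parameters are hypothetical names.

```vbnet
Imports System

Public Module EpsilonGreedyDemo

    ' Returns the index of the chosen action.
    ' With probability epsilon a random action is explored;
    ' otherwise the action with the highest Q value is exploited.
    Public Function EpsilonGreedy(qValues As Single(), epsilon As Double, rng As Random) As Integer
        If rng.NextDouble() < epsilon Then
            ' Exploration: any action index in [0, qValues.Length)
            Return rng.Next(qValues.Length)
        End If

        ' Exploitation: greedy arg-max (the first index wins on ties)
        Dim best As Integer = 0
        For i As Integer = 1 To qValues.Length - 1
            If qValues(i) > qValues(best) Then best = i
        Next
        Return best
    End Function

End Module
```

With four actions and the default ExplorationChance of 0.05, the greedy action is picked about 95% + 5%/4 ≈ 96.25% of the time, because a random exploration step can also land on it.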

Defining the best action

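As mentioned above, the core of the Q-Learning algorithm is the Q values (rewards and penalties) of the actions in a specific environment state, stored in the QTable object. So we define an Action object to represent the best action for a specific environment state: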

```vbnet
Imports Microsoft.VisualBasic.ComponentModel.Collection.Generic
Imports Microsoft.VisualBasic.Serialization

Namespace QLearning

    ''' <summary>
    ''' One specific environment state has several possible actions,
    ''' but there is only one best action for the current environment state,
    ''' based on the accumulated Q-values.
    ''' </summary>
    Public Class Action : Implements sIdEnumerable

        ''' <summary>
        ''' The environment variables state as inputs for the machine.
        ''' </summary>
        ''' <returns></returns>
        Public Property EnvirState As String Implements sIdEnumerable.Identifier

        ''' <summary>
        ''' Q values of the actions for the current state (one element per action).
        ''' </summary>
        ''' <returns></returns>
        Public Property Qvalues As Single()

        ''' <summary>
        ''' Environment -> actions' Q-values
        ''' </summary>
        ''' <returns></returns>
        Public Overrides Function ToString() As String
            Return $"[ {EnvirState} ] {vbTab}--> {Qvalues.GetJson}"
        End Function

    End Class

End Namespace
```

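From the class defined above, we know that in the current environment state EnvirState the program has several possible actions. Each action is encoded as an index into the Qvalues array, and each element of Qvalues holds the reward of that action in the state EnvirState: the higher the value, the higher the reward of that action in this state. So we simply let the program return the index of the largest value in Qvalues, and that index can be decoded into the best action. Getting the best action for the current state looks like the following function: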

```vbnet
''' <summary>
''' The getBestAction function uses a greedy approach for finding
''' the best action to take. Note that if all Q values for the current
''' state are equal (such as all 0 if the state has never been visited
''' before), then getBestAction will always choose the same action.
''' If such an action is invalid, this may lead to a deadlock as the
''' map state never changes: for situations like these, exploration
''' can get the algorithm out of this deadlock.
''' </summary>
''' <param name="map"> current map (state) </param>
''' <returns> the action with the highest Q value </returns>
Private Function __getBestAction(map As T) As Integer
    Dim rewards() As Single = Me.__getActionsQValues(map)
    Dim maxRewards As Single = Single.NegativeInfinity
    Dim indexMaxRewards As Integer = 0

    For i As Integer = 0 To rewards.Length - 1
        ' Find the maximum Q value and its index in Qvalues
        If maxRewards < rewards(i) Then
            maxRewards = rewards(i)
            indexMaxRewards = i
        End If
    Next i

    ' The caller decodes this index into a joypad control
    Return indexMaxRewards
End Function
```
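The doc comment above warns that when all Q values of a state are equal, the greedy scan always returns index 0. A common remedy, shown here as a swapped-in variant and not what the framework does, is to break ties uniformly at random among all maximal entries; ArgMaxRandomTie is a hypothetical name.

```vbnet
Imports System
Imports System.Collections.Generic

Public Module TieBreaking

    ' Arg-max that picks uniformly among all indices sharing the maximum value,
    ' so an unvisited state (all zeros) does not always yield action 0.
    Public Function ArgMaxRandomTie(qValues As Single(), rng As Random) As Integer
        Dim maxValue As Single = Single.NegativeInfinity
        Dim candidates As New List(Of Integer)()

        For i As Integer = 0 To qValues.Length - 1
            If qValues(i) > maxValue Then
                ' New maximum: restart the candidate list
                maxValue = qValues(i)
                candidates.Clear()
                candidates.Add(i)
            ElseIf qValues(i) = maxValue Then
                ' Equal to the current maximum: keep it as an alternative
                candidates.Add(i)
            End If
        Next

        Return candidates(rng.Next(candidates.Count))
    End Function

End Module
```

When there is a unique maximum this behaves exactly like __getBestAction; it only differs when several actions share the top Q value.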

In this snake game the program's joypad has only four direction keys, so the Qvalues property of the Action class has four elements, each holding the Q value of one direction button the program can press.

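Once the QTable has returned the index of the best action for the current environment state, we can decode the action index into a joypad control for the snake: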

```vbnet
Dim index As Integer = Q.NextAction(_stat.Current)
Dim action As Controls

Select Case index
    Case 0
        action = Controls.Up
    Case 1
        action = Controls.Down
    Case 2
        action = Controls.Left
    Case 3
        action = Controls.Right
    Case Else
        action = Controls.NotBind
End Select

Call _snakeGame.Invoke(action)
```

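So this is how the program acts in the current environment state:

- If the random number falls below ExplorationChance, the program takes a random action to explore its new world.
- Otherwise, the program decides on the best action based on the current environment state and its history in the QTable; that is, it acts on experience.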
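Because the exploration chance is customarily lowered as training goes on (as the ExplorationChance remarks in the QTable class note), a decay schedule is often applied between games. The exponential schedule below, with hypothetical start, minimum and decay parameters, is one common choice and purely an assumption: the article does not say which schedule, if any, the snake AI uses.

```vbnet
Imports System

Public Module ExplorationSchedule

    ' Exponentially decays the exploration chance per episode and never lets it
    ' fall below a floor, so the agent keeps exploring a little forever.
    Public Function ExplorationAt(episode As Integer, start As Double,
                                  minimum As Double, decay As Double) As Double
        Return Math.Max(minimum, start * Math.Pow(decay, episode))
    End Function

End Module
```

For instance, setting Q.ExplorationChance = CSng(ExplorationAt(episode, 0.5, 0.05, 0.99)) at the start of each game would begin with 50% exploration and settle at the 5% floor after roughly 230 games.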

Phase 3: Feedback (learning or adaptation)

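As the __getBestAction function above shows, the program first gets the Q values for the current environment state and then, by comparing them, picks the action index that maximizes the reward.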

Learning, step 1: see the world

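To build up the program's experience of doing something, a dictionary add operation stores each new environment state in the QTable, which lets the program learn about its new world: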

```vbnet
''' <summary>
''' This helper function is used for entering the map state into the
''' HashMap
''' </summary>
''' <param name="map"> current map (state) </param>
''' <returns> String used as a key for the HashMap </returns>
Protected MustOverride Function __getMapString(map As T) As String

''' <summary>
''' The getActionsQValues function returns an array of Q values for
''' all the actions available at any state. Note that if the current
''' map state does not already exist in the Q table (never visited
''' before), then it is initiated with Q values of 0 for all of the
''' available actions.
''' </summary>
''' <param name="map"> current map (state) </param>
''' <returns> an array of Q values for all the actions available at any state </returns>
Private Function __getActionsQValues(map As T) As Single()
    Dim actions() As Single = GetValues(map)

    If actions Is Nothing Then ' This state has not been seen yet, so add a new entry for it
        Dim initialActions(ActionRange - 1) As Single

        For i As Integer = 0 To ActionRange - 1
            initialActions(i) = 0.0F
        Next i

        ' If the current environment state is not in the program's memory,
        ' then store it: this is the so-called learning
        _Table += New Action With {
            .EnvirState = __getMapString(map),
            .Qvalues = initialActions
        }

        Return initialActions
    End If

    Return actions
End Function
```
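Stripped of the framework types, this learn-on-first-sight behaviour can be sketched with a standard Dictionary(Of String, Single()). The sketch assumes string state keys (such as those produced by GameControl.ToString) and a fixed number of actions; ExperienceTable and GetOrAdd are hypothetical names, not the framework's API.

```vbnet
Imports System
Imports System.Collections.Generic

Public Class ExperienceTable

    Private ReadOnly _table As New Dictionary(Of String, Single())()
    Private ReadOnly _actionRange As Integer

    Public Sub New(actionRange As Integer)
        _actionRange = actionRange
    End Sub

    ' Number of distinct environment states seen so far.
    Public ReadOnly Property Count As Integer
        Get
            Return _table.Count
        End Get
    End Property

    ' Returns the Q-value row for a state, creating an all-zero row the first
    ' time the state is seen. The stored array itself is returned (not a copy),
    ' so writes made through it are kept in the table.
    Public Function GetOrAdd(stateKey As String) As Single()
        Dim row As Single() = Nothing
        If Not _table.TryGetValue(stateKey, row) Then
            row = New Single(_actionRange - 1) {}   ' new arrays are zero-initialised
            _table.Add(stateKey, row)
        End If
        Return row
    End Function

End Class
```

Returning the stored array matters: UpdateQvalue modifies the array returned by __getActionsQValues in place, so the update only persists if that array is the one held in the table.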

Learning, step 2: improving experience

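When teaching a child, we usually give a reward when the child behaves the way we expect. Q-Learning works the same way: we use rewards to improve the program's experience of which action to take in a specific environment state: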

```vbnet
''' <summary>
''' The updateQvalue is the heart of the Q-learning algorithm. Based on
''' the reward gained by taking the action prevAction while being in the
''' state prevState, the updateQvalue must update the Q value of that
''' {prevState, prevAction} entry in the Q table. In order to do that,
''' the Q value of the best action of the current map state must also
''' be calculated.
''' </summary>
''' <param name="reward"> at the current map state </param>
''' <param name="map"> current map state (for finding the best action of the
''' current map state) </param>
Public Overridable Sub UpdateQvalue(reward As Integer, map As T)
    Dim preVal() As Single = Me.__getActionsQValues(Me._prevState)

    preVal(Me._prevAction) += Me.LearningRate * (reward + Me.GammaValue * _
        Me.__getActionsQValues(map)(Me.__getBestAction(map)) - preVal(Me._prevAction))
End Sub
```

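The update performed by UpdateQvalue can be expressed as the following formula:

$$Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]$$

where $Q(s, a)$ is the Q value of the action $a$ taken in the previous environment state $s$, $s'$ is the current environment state, $\max_{a'} Q(s', a')$ is the Q value of the best action in the current state, $r$ is the reward, $\alpha$ is the learning rate (LearningRate) and $\gamma$ is the discount factor (GammaValue).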
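To make the update concrete, here is a single step worked through in code, using the class defaults LearningRate = 0.15 and GammaValue = 0.9. UpdatedQ is a hypothetical helper that repeats the arithmetic of UpdateQvalue on plain numbers.

```vbnet
Imports System

Public Module UpdateDemo

    ' One Q-learning update, mirroring the arithmetic in UpdateQvalue:
    ' Q(prev) += rate * (reward + gamma * bestNextQ - Q(prev))
    Public Function UpdatedQ(oldQ As Single, reward As Integer, bestNextQ As Single,
                             learningRate As Single, gamma As Single) As Single
        Return oldQ + learningRate * (reward + gamma * bestNextQ - oldQ)
    End Function

End Module
```

Starting from an unvisited state (Q = 0), a reward of 10 and a best next-state Q value of 2 give 0.15 × (10 + 0.9 × 2) = 1.77. If the same action later earns a penalty of -5 from a state whose best Q value is 0, the value drops to 1.77 + 0.15 × (-5 - 1.77) ≈ 0.75.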

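If the program's action brings it closer to our goal, we update that action's Q value with a reward; if not, we update it with a penalty score. After a number of training loops, the program will behave the way we expect. Q-Learning is much like the classical conditioning of a dog.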
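The article does not list the reward values used by the snake AI, so the function below is only a hypothetical shaping scheme in the spirit of the paragraph above (and of the Distance call in the Phase 1 snippet): a large reward for eating, a large penalty for dying, and a small reward or penalty depending on whether the move brought the head closer to the food. All names, the Manhattan distance, and every number are assumptions.

```vbnet
Imports System

Public Module RewardShaping

    ' Hypothetical reward values; tune them for your own game.
    Public Const EatReward As Integer = 100
    Public Const DeathPenalty As Integer = -100
    Public Const CloserReward As Integer = 1
    Public Const FartherPenalty As Integer = -1

    ' Manhattan distance on a grid (an assumption; any distance measure works).
    Public Function Manhattan(x1 As Integer, y1 As Integer, x2 As Integer, y2 As Integer) As Integer
        Return Math.Abs(x1 - x2) + Math.Abs(y1 - y2)
    End Function

    ' Reward for one move, given the head-to-food distance before and after it.
    ' Moves that do not get closer to the food are penalised.
    Public Function Reward(distanceBefore As Integer, distanceAfter As Integer,
                           ateFood As Boolean, died As Boolean) As Integer
        If died Then Return DeathPenalty
        If ateFood Then Return EatReward
        Return If(distanceAfter < distanceBefore, CloserReward, FartherPenalty)
    End Function

End Module
```

After each move, the resulting value would be passed as the reward argument of UpdateQvalue(reward, map).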

Running the example

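To save you the time of training, you can download the trained snake QL_AI QTable data from this link and start the snake game with the trained data using the following command:

```
snakeAI /start /load "filepath-to-the-download-dataset"
```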

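Alternatively, you can train the snake yourself: to train it from scratch, just double-click the snake application and it will start running from a fresh state.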
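Training from scratch runs the three phases described above over and over. The snake engine itself is not reproduced in this article, so the self-contained sketch below runs the same loop on a hypothetical one-dimensional corridor instead: the agent starts in cell 0, can step left or right, gets -1 per step and +10 for reaching the last cell. It uses the same update rule and an ε-greedy choice (with random tie-breaking), but the corridor, its rewards and all parameters are assumptions made for illustration.

```vbnet
Imports System

Public Module CorridorQLearning

    Public Const Cells As Integer = 6          ' states 0..5, the goal is cell 5
    Public Const Actions As Integer = 2        ' 0 = left, 1 = right

    ' Trains a Q table on the corridor and returns it as q(state, action).
    Public Function Train(episodes As Integer, seed As Integer) As Single(,)
        Dim q(Cells - 1, Actions - 1) As Single
        Dim rng As New Random(seed)
        Const learningRate As Single = 0.15F
        Const gamma As Single = 0.9F
        Const exploration As Double = 0.1

        For episode As Integer = 1 To episodes
            Dim state As Integer = 0
            Dim steps As Integer = 0
            Do While state <> Cells - 1 AndAlso steps < 100
                ' Phase 2: choose an action (epsilon-greedy, random on ties)
                Dim action As Integer
                If rng.NextDouble() < exploration OrElse q(state, 0) = q(state, 1) Then
                    action = rng.Next(Actions)
                Else
                    action = If(q(state, 1) > q(state, 0), 1, 0)
                End If

                ' Environment step: walls at both ends of the corridor
                Dim nextState As Integer = Math.Max(0, Math.Min(Cells - 1, state + If(action = 1, 1, -1)))
                Dim reward As Integer = If(nextState = Cells - 1, 10, -1)

                ' Phase 3: Q(s,a) += rate * (r + gamma * max Q(s',.) - Q(s,a))
                Dim bestNext As Single = Math.Max(q(nextState, 0), q(nextState, 1))
                If nextState = Cells - 1 Then bestNext = 0F   ' the goal has no future reward
                q(state, action) += learningRate * (reward + gamma * bestNext - q(state, action))

                ' Phase 1 of the next step: observe the new state
                state = nextState
                steps += 1
            Loop
        Next

        Return q
    End Function

    ' Greedy policy read from the trained table: 1 means "go right".
    Public Function GreedyAction(q As Single(,), state As Integer) As Integer
        Return If(q(state, 1) > q(state, 0), 1, 0)
    End Function

End Module
```

Because every trip from cell 0 to the goal has to step right from each of the cells 0 to 4, every right-moving Q value is updated at least once per completed episode, and after a few hundred episodes the greedy policy moves right everywhere. Q(4, right) approaches the goal reward of 10, and each cell further from the goal is worth about 0.9 times its neighbour's value minus 1.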

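This article only tries to explain the basic concepts of machine learning through the simplest snake game. I hope it inspires you to build a more powerful AI program that can handle more complex environments.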

I hope you enjoy it! ;-)