Silverlight 5 and NSpeex: Capture Audio from a Capture Source, Encode It, Save It to a File, and Decode It for Playback


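This article uses NSpeex as the codec to encode and decode audio from a capture source (for example, a computer microphone) in Silverlight.

Introduction

I had an idea for an application that needed to capture audio, save it to disk, and play it back later, all using Silverlight. I also needed the captured audio file saved on disk to be as small as possible.

Access to raw audio samples has been possible in Silverlight for some time, but preparing the raw bytes and writing them to disk is a task you have to handle yourself. You do this by using AudioCaptureDevice to connect to an available audio source (for example, a microphone) and the AudioSink class in the System.Windows.Media namespace to access the raw samples.

My goal was not only to capture and access the raw data, but also to compress it and write it to a file. I did not know what to use to get this working inside Silverlight.

NSpeex (http://nspeex.codeplex.com/) came to the rescue. I found it after a quick search on Code Project (see the discussion section of this article: https://codeproject.org.cn/Articles/20093/Speex-in-C?msg=3660549#xx3660549xx).

Unfortunately, there is not much documentation on that site, so I had a hard time figuring out the whole encoding/decoding process.

This is how I did it, and I hope it helps anyone who has to build a similar application.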

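Preparation

- Accessing CaptureDevices requires elevated trust...

- Writing to disk requires you to enable running the application out of the browser

- Encoding raw samples requires you to write an audio capture class derived from AudioSink

- Decoding to raw samples requires you to write a custom stream source class derived from MediaStreamSource

Our audio capture class must override some methods of the AudioSink abstract class. These are OnCaptureStarted(), OnCaptureStopped(), OnFormatChange() and OnSamples().

As for MediaStreamSource, you may want to refer to my previous article, which summarizes the methods to override: https://codeproject.org.cn/Articles/251874/Play-AVI-files-in-Silverlight-5-using-MediaElement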

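Using the code

- Create a new Silverlight 5 project (C#) and give it any name you like

- Set the project properties as described in my previous article: https://codeproject.org.cn/Articles/251874/Play-AVI-files-in-Silverlight-5-using-MediaElement

- Open the UserControl created by default, named MainPage.xaml

- Change the XAML to something similar to the code below, which includes the following controls:

- MediaElement: requests the audio samples

- Four buttons: start/stop recording and start/stop playing audio

- Slider: adjusts the audio quality used during encoding

- TextBoxes: display the recording/playback duration and the recording limit (in minutes)

- ComboBox: lists the capture devices

- Two DataGrids: list the audio formats and show details of the saved audio files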

<Grid x:Name="LayoutRoot" Background="White">

<Grid.RowDefinitions>

<RowDefinition Height="56"/>

<RowDefinition Height="344*" />

</Grid.RowDefinitions>

<MediaElement AutoPlay="True"

Margin="539,8,0,0" x:Name="MediaPlayer"

Stretch="Uniform" Height="20" Width="47"

VerticalAlignment="Top" HorizontalAlignment="Left"

MediaOpened="MediaPlayer_MediaOpened" MediaEnded="MediaPlayer_MediaEnded" />

<Button Content="Start Rec" Height="23" HorizontalAlignment="Left" Margin="12,23,0,0"

Name="StartRecording" VerticalAlignment="Top" Width="64" Click="StartRecording_Click" />

<Button Content="Stop Rec" Height="23" HorizontalAlignment="Left" Margin="82,23,0,0"

Name="StopRecording" VerticalAlignment="Top" Width="59" Click="StopRecording_Click" IsEnabled="False" />

<Button Content="Start Play" Height="23" HorizontalAlignment="Right" Margin="0,23,77,0"

Name="StartPlaying" VerticalAlignment="Top" Width="64" Click="StartPlaying_Click" />

<Button Content="Stop" Height="23" HorizontalAlignment="Right" Margin="0,23,12,0"

Name="StopPlaying" VerticalAlignment="Top" Width="59" Click="StopPlaying_Click" IsEnabled="False" />

<!--<Button Content="Button" Height="23" HorizontalAlignment="Left" Margin="592,8,0,0"

Name="button1" VerticalAlignment="Top" Width="60"

Click="button1_Click" Visibility="Visible" IsEnabled="False" />-->

<Slider Name="Quality" Value="{Binding QualityValue, Mode=TwoWay}" Height="23" Width="129"

HorizontalAlignment="Left" VerticalAlignment="Top"

Margin="342,23,0,0"

Minimum="2" Maximum="7" SmallChange="1" DataContext="{Binding}" />

<TextBox Text="{Binding timeSpan, Mode=OneWay}" Margin="147,23,0,0" TextAlignment="Center"

HorizontalAlignment="Left" Width="85" IsEnabled="False" Height="23" VerticalAlignment="Top" />

<TextBlock Text="/" Margin="0,3,104,0" HorizontalAlignment="Right" Width="25" Height="38"

VerticalAlignment="Top" FontSize="24" TextAlignment="Center"

FontWeight="Normal" Foreground="#FF8D8787" Grid.Row="1" />

<TextBox Text="{Binding RecLimit, Mode=TwoWay}" Margin="250,23,0,0"

TextAlignment="Center" HorizontalAlignment="Left" Width="43" Height="23" VerticalAlignment="Top" />

<TextBox Text="{Binding QualityValue, Mode=TwoWay}" Margin="477,23,0,0"

TextAlignment="Center" HorizontalAlignment="Left" Width="47"

IsEnabled="False" Height="23" VerticalAlignment="Top" />

<TextBox Text="{Binding timeSpan, Mode=OneWay}" Margin="0,5,125,0" TextAlignment="Center"

IsEnabled="False" FontSize="24" FontWeight="Bold" FontFamily="Arial" BorderBrush="{x:Null}"

Height="33" VerticalAlignment="Top" HorizontalAlignment="Right" Width="107"

Grid.Row="1" Background="Black" Foreground="#FFADABAB" />

<TextBlock Text="/" Margin="229,25,0,0" HorizontalAlignment="Left" Width="25" Height="21"

VerticalAlignment="Top" FontSize="13" TextAlignment="Center" FontWeight="Normal" Foreground="#FF8D8787" />

<TextBox Text="{Binding PlayTime, Mode=OneWay}" Margin="0,5,4,0" TextAlignment="Center"

IsEnabled="False" FontSize="24" FontWeight="Bold" FontFamily="Arial" BorderBrush="{x:Null}"

Height="33" VerticalAlignment="Top" Grid.Row="1" HorizontalAlignment="Right"

Width="107" Foreground="#FFADABAB" Background="#FF101010" />

<TextBlock Text="Minutes" Margin="295,29,0,0"

HorizontalAlignment="Left" Width="47" Height="15" VerticalAlignment="Top" />

<TextBlock Text="Quality" Margin="340,8,0,0" TextAlignment="Center" FontWeight="Bold"

HorizontalAlignment="Left" Width="50" Height="15" VerticalAlignment="Top" />

<TextBlock Text="Limit" Margin="253,8,0,0" TextAlignment="Center"

FontWeight="Bold" HorizontalAlignment="Left" Width="36" Height="15" VerticalAlignment="Top" />

<TextBlock Text="Recorded Files" Margin="0,19,238,0" TextAlignment="Left"

FontWeight="Bold" HorizontalAlignment="Right" Width="126"

Height="15" VerticalAlignment="Top" Grid.Row="1" />

<ComboBox

x:Name="comboDeviceType" ItemsSource="{Binding AudioDevices, Mode=OneWay}"

DisplayMemberPath="FriendlyName" SelectedIndex="0" Height="21" VerticalAlignment="Top"

HorizontalAlignment="Left" Width="429" Margin="0,13,0,0" Grid.Row="1" />

<dg:DataGrid x:Name="RecordedFiles" SelectedIndex="0"

ItemsSource="{Binding FileItems, Mode=OneWay}" Grid.Row="1"

AutoGenerateColumns="False" Margin="430,40,0,0"

SelectionChanged="RecordedFiles_SelectionChanged">

<dg:DataGrid.Columns>

<dg:DataGridTextColumn Width="209" Header="File Path" Binding="{Binding FilePath}" />

<dg:DataGridTextColumn Header="File Size" Binding="{Binding FileSize}" />

<dg:DataGridTextColumn Header="Duration" Binding="{Binding FileDuration}" />

</dg:DataGrid.Columns>

</dg:DataGrid>

<dg:DataGrid x:Name="SourceDetailsFormats" SelectedIndex="0" Grid.Row="1"

ItemsSource="{Binding ElementName=comboDeviceType,Path=SelectedValue.SupportedFormats}"

AutoGenerateColumns="False" HorizontalAlignment="Left" Width="429" Margin="0,40,0,0">

<dg:DataGrid.Columns>

<dg:DataGridTextColumn Header="Wave Format" Binding="{Binding WaveFormat}" />

<dg:DataGridTextColumn Header="Channels" Binding="{Binding Channels}" />

<dg:DataGridTextColumn Header="Bits Per Sample" Binding="{Binding BitsPerSample}" />

<dg:DataGridTextColumn Header="Samples Per Second" Binding="{Binding SamplesPerSecond}" />

</dg:DataGrid.Columns>

</dg:DataGrid>

</Grid>

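Now that we have created the UI, we need to write the code-behind that makes the UI controls respond.

But first, let us write the classes we need: one to capture audio from the capture device, and another to respond to the MediaElement's requests for audio samples during playback.

- Make sure you download the NSpeex library from http://nspeex.codeplex.com and add a reference to the NSpeex.Silverlight DLL

- Create a custom class derived from AudioSink (I named it MemoryAudioSink.cs)

- Create a custom class derived from MediaStreamSource (I named it CustomSourcePlayer.cs)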

//

// MemoryAudioSink.cs

//

using System;

using System.Windows.Media;

using System.IO;

using NSpeex; // <--- you need this

namespace myAudio_23._12._212.Audio

{

public class MemoryAudioSink : AudioSink //, INotifyPropertyChanged

{

// declaration of variables ...

// ... see attached code ...

protected override void OnCaptureStarted()

{

// set the encoder quality to the value selected

encoder.Quality = _quality;

// reserve 4 bytes (an Int32) to hold the duration of the recorded audio

// this is updated at the end of the recording

writer.Write(BitConverter.GetBytes(timeSpan));

// initialize the start time

recTime = DateTime.Now;

// initialize the recording duration

_timeSpan = 0;// "00:00:00";

}

protected override void OnCaptureStopped()

{

// We now need to update the encoded file with how long the recording took

// move the pointer to the beginning of the byte array

writer.Seek(0, SeekOrigin.Begin);

// now update to the duration of audio captured and close the BinaryWriter

writer.Write(BitConverter.GetBytes(timeSpan));

writer.Close();

}

protected override void OnFormatChange(AudioFormat audioFormat)

{

if (audioFormat.WaveFormat != WaveFormatType.Pcm)

throw new InvalidOperationException("MemoryAudioSink supports only PCM audio format.");

}

protected override void OnSamples(long sampleTimeInHundredNanoseconds,

long sampleDurationInHundredNanoseconds, byte[] sampleData)

{

// increment the number of samples captured by 1

numSamples++;

// New audio data arrived, so encode them.

var encodedAudio = EncodeAudio(sampleData);

// keep track of the duration of recording

// the final one will be used in the OnCaptureStopped

// NOTE: we could have calculated at the time

// of OnCaptureStopped but this is used by the UI code behind

// for displaying the recording time

_timeSpan = (Int32)(DateTime.Now.Subtract(recTime).TotalSeconds);

try

{

// get the length of the encoded Audio

encodedByteSize = (Int16)encodedAudio.Length;

// get bytes of the size of encoded Sample and write it to file

// before writing the bytes of the encoded Sample, we first write its size

writer.Write(BitConverter.GetBytes(encodedByteSize));

// now write the encoded Bytes to file

writer.Write(encodedAudio);

}

catch (Exception ex)

{

// Do something about the exception

}

}

public byte[] EncodeAudio(byte[] rawData)

{

// the NSpeex encoder expects its input as an array of shorts,

// hence we divide the length by 2 because a short holds 2 bytes

var inDataSize = rawData.Length / 2;

// create array to hold the raw data

var inData = new short[inDataSize];

// transfer the raw data 2 bytes at a time; the bound skips a trailing odd byte

for (int index = 0; index + 1 < rawData.Length; index += 2)

{

inData[index / 2] = BitConverter.ToInt16(rawData, index);

}

// raw data size should be multiple of the encoder frameSize i.e. 320

inDataSize = inDataSize - inDataSize % encoder.FrameSize;

// run the encoder

var encodedData = new byte[rawData.Length];

var encodedBytes = encoder.Encode(inData, 0, inDataSize, encodedData, 0, encodedData.Length);

// declare and initialize encoded Audio data array

byte[] encodedAudioData = null;

if (encodedBytes != 0)

{

// reinitialize the array to the size of the encodedData

encodedAudioData = new byte[encodedBytes];

// now copy the encoded bytes to our new array

Array.Copy(encodedData, 0, encodedAudioData, 0, encodedBytes);

}

return encodedAudioData;

}

}

}
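EncodeAudio above trims inDataSize down to a multiple of encoder.FrameSize, so any samples past the last whole frame of a capture buffer are never encoded. The following is a minimal sketch of one way to keep them. It assumes a hypothetical FrameAccumulator helper (not part of NSpeex or of the attached code) that holds the remainder back and prepends it to the next buffer:

```csharp
using System;

// Hypothetical helper for illustration; not part of NSpeex or the attached code.
public class FrameAccumulator
{
    private readonly int _frameSize;
    private short[] _leftover = new short[0];

    public FrameAccumulator(int frameSize)
    {
        if (frameSize <= 0) throw new ArgumentOutOfRangeException("frameSize");
        _frameSize = frameSize;
    }

    // Number of samples currently held back for the next call.
    public int PendingSamples { get { return _leftover.Length; } }

    // Joins the held-back samples with the new ones, returns the largest
    // block that is a whole number of frames, and keeps the rest.
    public short[] Append(short[] samples)
    {
        short[] all = new short[_leftover.Length + samples.Length];
        Array.Copy(_leftover, 0, all, 0, _leftover.Length);
        Array.Copy(samples, 0, all, _leftover.Length, samples.Length);

        int usable = all.Length - all.Length % _frameSize;
        short[] ready = new short[usable];
        Array.Copy(all, 0, ready, 0, usable);

        _leftover = new short[all.Length - usable];
        Array.Copy(all, usable, _leftover, 0, _leftover.Length);
        return ready;
    }
}
```

With a helper like this, the short array built in EncodeAudio would pass through Append before reaching the encoder, and the constructor would receive the encoder's frame size (320 according to the comment above). Whatever is still pending when capture stops would have to be padded or discarded.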

//

// CustomSourcePlayer.cs

//

using System;

using System.Windows.Media;

using System.Collections.Generic;

using System.IO;

using System.Globalization;

using NSpeex; // <<--- you need this

namespace myAudio_23._12._212

{

public class CustomSourcePlayer : MediaStreamSource

{

// declaration of variables ...

// ... see attached code ...

protected override void OpenMediaAsync()

{

playTime = DateTime.Now;

_timeSpan = "00:00:00";

Dictionary<MediaSourceAttributesKeys, string> sourceAttributes =

new Dictionary<MediaSourceAttributesKeys, string>();

List<MediaStreamDescription> availableStreams = new List<MediaStreamDescription>();

_audioDesc = PrepareAudio();

availableStreams.Add(_audioDesc);

sourceAttributes[MediaSourceAttributesKeys.Duration] =

TimeSpan.FromSeconds(0).Ticks.ToString(CultureInfo.InvariantCulture);

sourceAttributes[MediaSourceAttributesKeys.CanSeek] = false.ToString();

ReportOpenMediaCompleted(sourceAttributes, availableStreams);

}

private MediaStreamDescription PrepareAudio()

{

int ByteRate = _audioSampleRate * _audioChannels * (_audioBitsPerSample / 8);

// I think I got this WaveFormatEx Class from http://10rem.net/

// reference: http://msdn.microsoft.com/en-us/library/aa908934.aspx

_waveFormat = new WaveFormatEx();

_waveFormat.BitsPerSample = _audioBitsPerSample;

_waveFormat.AvgBytesPerSec = (int)ByteRate;

_waveFormat.Channels = _audioChannels;

_waveFormat.BlockAlign = (short)(_audioChannels * (_audioBitsPerSample / 8));

_waveFormat.ext = null;

_waveFormat.FormatTag = WaveFormatEx.FormatPCM;

_waveFormat.SamplesPerSec = _audioSampleRate;

_waveFormat.Size = 0;

// reference: http://msdn.microsoft.com/en-us/library/system.windows.media.mediastreamattributekeys(v=vs.95).aspx

Dictionary<MediaStreamAttributeKeys, string> streamAttributes = new Dictionary<MediaStreamAttributeKeys, string>();

streamAttributes[MediaStreamAttributeKeys.CodecPrivateData] = _waveFormat.ToHexString();

// reference: http://msdn.microsoft.com/en-us/library/system.windows.media.mediastreamdescription(v=vs.95).aspx

return new MediaStreamDescription(MediaStreamType.Audio, streamAttributes);

}

protected override void CloseMedia()

{

cleanup();

}

public void cleanup()

{

reader.Close();

}

// this method is called by MediaElement every time a request for a sample is made

protected override void GetSampleAsync(

MediaStreamType mediaStreamType)

{

//if (mediaStreamType == MediaStreamType.Audio)

GetAudioSample();

}

private void GetAudioSample()

{

MediaStreamSample msSamp;

if (reader.BaseStream.Position < reader.BaseStream.Length)

{

encodedSamples = BitConverter.ToInt16(reader.ReadBytes(2), 0);

byte[] decodedAudio = Decode(reader.ReadBytes(encodedSamples));

//encodedSamples = 8426;

memStream = new MemoryStream(decodedAudio);

//MediaStreamSample

msSamp = new MediaStreamSample(_audioDesc, memStream, _audioStreamOffset, memStream.Length,

_currentAudioTimeStamp, _emptySampleDict);

_currentAudioTimeStamp += _waveFormat.AudioDurationFromBufferSize((uint)memStream.Length);

}

else

{

// Report end of stream

msSamp = new MediaStreamSample(_audioDesc, null, 0, 0, 0, _emptySampleDict);

}

ReportGetSampleCompleted(msSamp);

// calculate how long the audio has played so far

_timeSpan = TimeSpan.FromSeconds((Int32)(DateTime.Now.Subtract(playTime).TotalSeconds)).ToString();

}

public byte[] Decode(byte[] encodedData)

{

try

{

// should be the same number of samples as on the capturing side

short[] decodedFrame = new short[44100];

int decodedBytes = decoder.Decode(encodedData, 0, encodedData.Length, decodedFrame, 0, false);

byte[] decodedAudioData = null;

decodedAudioData = new byte[decodedBytes * 2];

try

{

for (int shortIndex = 0, byteIndex = 0; shortIndex < decodedBytes; shortIndex++, byteIndex += 2)

{

byte[] temp = BitConverter.GetBytes(decodedFrame[shortIndex]);

decodedAudioData[byteIndex] = temp[0];

decodedAudioData[byteIndex + 1] = temp[1];

}

}

catch (Exception ex)

{

return decodedAudioData;

}

// to do: with decoded data

return decodedAudioData;

}

catch (Exception ex)

{

//System.Diagnostics.Debug.WriteLine(ex.Message.ToString());

return null;

}

}

}

}
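Both EncodeAudio and Decode convert between byte[] and short[] one sample at a time through BitConverter. As an alternative, Buffer.BlockCopy can move the whole buffer in one call; it copies raw bytes, so it uses the same native byte order that BitConverter does. The sketch below assumes a hypothetical PcmConvert helper class, which is not in the attached code:

```csharp
using System;

// Hypothetical helper for illustration; not part of the attached code.
public static class PcmConvert
{
    // Reinterprets 16-bit PCM bytes as samples. A trailing odd byte is ignored.
    public static short[] ToShorts(byte[] raw)
    {
        int sampleCount = raw.Length / 2;
        short[] samples = new short[sampleCount];
        // Offsets and count are given in bytes, not in array elements.
        Buffer.BlockCopy(raw, 0, samples, 0, sampleCount * 2);
        return samples;
    }

    // Copies the first 'count' samples back into a byte array.
    public static byte[] ToBytes(short[] samples, int count)
    {
        byte[] raw = new byte[count * 2];
        Buffer.BlockCopy(samples, 0, raw, 0, raw.Length);
        return raw;
    }
}
```

In Decode, ToBytes(decodedFrame, decodedBytes) would take the place of the inner for loop, since the value returned by the decoder is used there as a count of shorts.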

With the custom classes created, we need to wire them together in the UI code-behind...

//

// MainPage.xaml.cs

//

using System;

using System.Windows;

using System.Windows.Controls;

using System.Windows.Media;

using System.IO;

using System.Collections;

using System.ComponentModel;

using System.Windows.Threading;

using System.Collections.ObjectModel;

using myAudio_23._12._212.Audio;

using System.Runtime.Serialization;

namespace myAudio_23._12._212

{

public partial class MainPage : UserControl, INotifyPropertyChanged

{

// declaration of variables ...

// ... see attached code ...

public MainPage()

{

InitializeComponent();

this.Loaded += (s, e) =>

{

this.DataContext = this;

// initialize the timer

this.timer = new DispatcherTimer()

{

Interval = TimeSpan.FromMilliseconds(200)

};

this.timer.Tick += new EventHandler(timer_Tick);

// I have named the serialized object file to recordedAudio.s2x

path = System.IO.Path.Combine(Environment.GetFolderPath(

Environment.SpecialFolder.MyDocuments), "recordedAudio.s2x");

if (reader != null)

reader.Close();

// First check if the file exists

if (File.Exists(path))

{

// open file for reading

reader = new BinaryReader(File.Open(path, FileMode.Open));

// I had decided to reserve first 4 bytes of the file to contain the total bytes

// that represents the serialized FileList object.

// *** I cannot remember why I decided to do that *****

// so here we extract the value from the 4 bytes ...

Int32 fSize = BitConverter.ToInt32(reader.ReadBytes(4), 0);

// ... and then extract the bytes representing the object to be deserialized

byte[] b = reader.ReadBytes(fSize);

// Now, declare and instantiate the object ...

FileList fL = new FileList(FileItems);

// ... deserialize the bytes back to its original form

fL = (FileList)WriteFromByte(b);

// This assignment will cause the NotifyPropertyChanged("FileItems") to be triggered

// and hence update the UI

FileItems = fL._files;

reader.Close();

}

// Make the first item in the RecordedFiles DataGrid selected

RecordedFiles.SelectedIndex = 0;

};

}

/// <summary>

/// This timer is started/stopped during recording/playing

/// If recording, it also keeps track of recording time limit in minutes

/// </summary>

/// <param name="sender"></param>

/// <param name="e"></param>

void timer_Tick(object sender, EventArgs e)

{

if (this._sink != null)

{

if (this._sink.timeSpan <= _recLimit * 60)

{

timeSpan = TimeSpan.FromSeconds(this._sink.timeSpan).ToString();

PlayTime = timeSpan;

}

else

StopRec();

}

else

{

timeSpan = this._mediaSource.timeSpan;

}

}

/// <summary>

/// checks to see if the user is allowed to access the capture devices

/// </summary>

/// <returns>True/False</returns>

private bool EnsureAudioAccess()

{

return CaptureDeviceConfiguration.AllowedDeviceAccess ||

CaptureDeviceConfiguration.RequestDeviceAccess();

}

private void StartRecording_Click(object sender, RoutedEventArgs e)

{

// check to see if user has permission to access capture devices

// if not do nothing - you may wish to display a message box

if (!EnsureAudioAccess())

return;

// initialize the new recording file name

// at least ensures unique name

long fileName = DateTime.Now.Ticks;

// get the path to where the file will be saved

// you may decide to display a file dialogue box for user to select since it is running

// out of browser

path = System.IO.Path.Combine(Environment.GetFolderPath(

Environment.SpecialFolder.MyDocuments), fileName.ToString()+".spx");

// check whether the file already exists

// you may wish to be more careful than simply deleting it

if (File.Exists(path))

{

File.Delete(path);

}

// set the writer to the file for writing bytes

writer = new BinaryWriter(File.Open(path, FileMode.OpenOrCreate));

// retrieve the audio capture devices

var audioDevice = CaptureDeviceConfiguration.GetDefaultAudioCaptureDevice();

// assign the audioDevice to our new Custom Source object

// we picked the Default,

// you may wish to let the user select one from the list. there may be more than one

_captureSource = new CaptureSource() { AudioCaptureDevice = audioDevice };

// instantiate our audio sink

_sink = new MemoryAudioSink();

// set the selected capture source to the audio sink

_sink.CaptureSource = _captureSource;

// set the writer to the audio sink

// used to write encoded bytes to file

_sink.SetWriter = writer;

// set the quality for encoding

_sink.Quality = (Int16)Quality.Value;

// initiate the start of capture

_captureSource.Start();

// reinitialize the time span to zeros

_timeSpan = "00:00:00";

// start the timer

this.timer.Start();

// call this method to enable/disable record/play buttons on UI

StartRec();

}

private void StartRec()

{

StartRecording.IsEnabled = false;

StopRecording.IsEnabled = true;

StartPlaying.IsEnabled = false;

}

private void StopRecording_Click(object sender, RoutedEventArgs e)

{

StopRec();

}

private void StopRec()

{

// prepare the FileItem object that holds only three items below ...

FileItem fI = new FileItem();

fI.FilePath = path; // path to the encoded NSpeex audio file

fI.FileSize = writer.BaseStream.Length; // file size

fI.FileDuration = timeSpan; // audio duration

// add FileItem object to collection of FileItem

FileItems.Add(fI);

// instantiate a File List object and initialize it to the content of the above File Item

FileList fL = new FileList(FileItems);

// create a byte array of serialized object

byte[] b = WriteFromObject(fL);

// set the path to save the serialized bytes to

path = System.IO.Path.Combine(Environment.GetFolderPath(

Environment.SpecialFolder.MyDocuments), "recordedAudio.s2x");

// check its existence, delete it if it does

if (File.Exists(path))

{

File.Delete(path);

}

// create a new Binary writer to write the bytes to file

BinaryWriter fileListwriter = new BinaryWriter(File.Open(path, FileMode.OpenOrCreate));

Int32 fSize = b.Length; // get the size of the file to be written

fileListwriter.Write(fSize); // first write the size of the byte array (of the object)

fileListwriter.Write(b); // then write the actual byte array (of the object);

_captureSource.Stop(); // stop capture from the capture device

this.timer.Stop(); // stop the timer

writer.Close(); // close the writer and the underlying stream

fileListwriter.Close(); // close the other writer and the underlying stream

_sink = null; // set the audio sink to null

// reset the buttons enabled status

StartRecording.IsEnabled = true;

StopRecording.IsEnabled = false;

StartPlaying.IsEnabled = true;

}

private void StartPlaying_Click(object sender, RoutedEventArgs e)

{

// make sure the previous reader is closed

if (reader != null)

reader.Close();

// confirm a file has been selected

if (!string.IsNullOrEmpty(fileToPlay))

{

// reinitialize the reader

reader = new BinaryReader(File.Open(fileToPlay, FileMode.Open));

// reinstantiate the media stream source

_mediaSource = new CustomSourcePlayer();

//Get the media total play time

PlayTime = TimeSpan.FromSeconds(

BitConverter.ToInt32(reader.ReadBytes(4), 0) - 1).ToString();

// set the media stream source reader to the created reader

_mediaSource.SetReader = reader;

// set the mediaElement's source to the media stream source

// mediaElement will call the overridden method to request samples at intervals

// since AutoPlay is set to "True", it will start playing immediately

MediaPlayer.SetSource(_mediaSource);

// reset the buttons enabled status

StartPlaying.IsEnabled = false;

StopPlaying.IsEnabled = true;

StartRecording.IsEnabled = false;

}

}

private void StopPlaying_Click(object sender, RoutedEventArgs e)

{

MediaPlayer.Stop();

StopPlay();

}

private void MediaPlayer_MediaOpened(object sender, RoutedEventArgs e)

{

timer.Start();

}

private void MediaPlayer_MediaEnded(object sender, RoutedEventArgs e)

{

StopPlay();

}

private void StopPlay()

{

timer.Stop();

_mediaSource.cleanup();

_mediaSource = null;

StartPlaying.IsEnabled = true;

StopPlaying.IsEnabled = false;

StartRecording.IsEnabled = true;

}

// Serialize the given object and return its byte array

public static byte[] WriteFromObject(FileList files)

{

//Create FileList object.

FileList fileItems = files;

//Create a stream to serialize the object to.

MemoryStream ms = new MemoryStream();

// Serialize the object to the stream.

DataContractSerializer ser = new DataContractSerializer(typeof(FileList));

ser.WriteObject(ms, fileItems);

byte[] array = ms.ToArray();

ms.Close();

ms.Dispose();

return array;

}

// deserialize the object from byte array.

public static object WriteFromByte(byte[] array)

{

MemoryStream memoryStream = new MemoryStream(array);

DataContractSerializer ser = new DataContractSerializer(typeof(FileList));

object obj = ser.ReadObject(memoryStream);

return obj;

}

// get the selected file to play

private void RecordedFiles_SelectionChanged(object sender, SelectionChangedEventArgs e)

{

IList list = e.AddedItems;

// AddedItems is empty when the selection is cleared, so guard before indexing

if (list.Count > 0)

fileToPlay = ((FileItem)list[0]).FilePath;

}

}

}
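The file list is stored as a 4-byte length followed by the DataContractSerializer output, and read back the same way when the page loads. The sketch below shows that round trip in one place. It is only an illustration: DemoFileItem and FileListStore are hypothetical stand-ins, because the FileList and FileItem classes live in the attached code and are not shown here.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Runtime.Serialization;

// Hypothetical stand-in for the FileItem class in the attached code.
[DataContract]
public class DemoFileItem
{
    [DataMember] public string FilePath { get; set; }
    [DataMember] public long FileSize { get; set; }
    [DataMember] public string FileDuration { get; set; }
}

// Hypothetical helper for illustration; not part of the attached code.
public static class FileListStore
{
    // Writes a 4-byte length prefix followed by the serialized list.
    public static void Save(Stream output, List<DemoFileItem> items)
    {
        var serializer = new DataContractSerializer(typeof(List<DemoFileItem>));
        using (var buffer = new MemoryStream())
        {
            serializer.WriteObject(buffer, items);
            byte[] payload = buffer.ToArray();
            var writer = new BinaryWriter(output);
            writer.Write(payload.Length);   // Int32, 4 bytes
            writer.Write(payload);
            writer.Flush();
        }
    }

    // Reads the length prefix, then exactly that many bytes, and deserializes them.
    public static List<DemoFileItem> Load(Stream input)
    {
        var reader = new BinaryReader(input);
        int length = reader.ReadInt32();
        byte[] payload = reader.ReadBytes(length);
        var serializer = new DataContractSerializer(typeof(List<DemoFileItem>));
        using (var buffer = new MemoryStream(payload))
        {
            return (List<DemoFileItem>)serializer.ReadObject(buffer);
        }
    }
}
```

One practical effect of the length prefix is that the reader knows exactly how many bytes belong to the serialized object, so other data could follow it in the same file. Whether that was the original reason for the prefix is not stated in the code comments.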

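Points of Interest

You may want to enable logging in the attached code so that you can get a feel for the size of the encoded bytes the NSpeex encoder returns for each sample. In my case, the size of the first sample differed from the rest of the encoded samples, and sometimes the last sample differed too.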

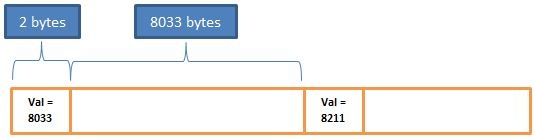

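To make sure the correct number of bytes is decoded for each sample, I decided to insert 2 bytes before each sample that hold the size of that particular encoded sample ...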

// get the length of the encoded Audio

encodedByteSize = (Int16)encodedAudio.Length;

// get bytes of the 'size' of the encoded Sample and write it to file

// before writing the actual bytes of the encoded Sample

writer.Write(BitConverter.GetBytes(encodedByteSize)); // <<--- the 2 bytes

// now write the encoded Bytes to file

writer.Write(encodedAudio);

// Log to file

logWriter.WriteLine(numSamples.ToString() + "\t" + encodedAudio.Length);
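Taken together with the duration written in OnCaptureStarted and OnCaptureStopped, the file layout is a 4-byte duration followed by a sequence of 2-byte sizes, each followed by that many encoded bytes. The sketch below writes and reads that layout without NSpeex or Silverlight, to show how the pieces fit. FramedAudioFile is a hypothetical name, and the sketch assumes a seekable stream so that the duration placeholder can be overwritten at the end:

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical helper for illustration; not part of the attached code.
public static class FramedAudioFile
{
    public static void Write(Stream output, IList<byte[]> frames, int durationSeconds)
    {
        var writer = new BinaryWriter(output);
        writer.Write(0);                        // 4-byte placeholder for the duration
        foreach (byte[] frame in frames)
        {
            // A 2-byte signed prefix can only describe up to 32767 bytes.
            if (frame.Length > short.MaxValue)
                throw new ArgumentException("Frame too large for a 2-byte size prefix.");
            writer.Write((short)frame.Length);  // the 2 bytes holding the size
            writer.Write(frame);                // the encoded bytes themselves
        }
        writer.Seek(0, SeekOrigin.Begin);       // go back and fill in the real duration
        writer.Write(durationSeconds);
        writer.Flush();
    }

    public static List<byte[]> Read(Stream input, out int durationSeconds)
    {
        var reader = new BinaryReader(input);
        durationSeconds = reader.ReadInt32();
        var frames = new List<byte[]>();
        while (input.Position < input.Length)
        {
            short size = reader.ReadInt16();    // how many bytes the next frame has
            frames.Add(reader.ReadBytes(size));
        }
        return frames;
    }
}
```

Because every frame carries its own size, the reader does not need to know in advance that the first or last encoded sample has a different length from the others.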

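If anyone can figure out how to save the file so that it plays in any player that has the Speex codec, it would be great if you could share it.

I hope you find this application useful.

History

No changes yet.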