SQL Server Express Edition 增强版

5.00/5 (15投票s)

如何省略 SQL Server Express Edition 的许多限制

引言

醒醒吧……你一直觉得这个世界乱七八糟。这个想法很奇怪,但却挥之不去——它就像脑子里的一个刺。你一生都活在全能的微软强加的限制和规则的牢笼里,却浑然不知。

点“不喜欢”,故事就结束了——你关闭标签页,漫无目的地在 YouTube 的推荐视频中闲逛。

但如果你想继续前进,进入一个奇幻世界,我将向你展示在 SQL Server Express 版上成功开发的兔子洞有多深……多不可能。

时不时地,我带着几分怀念回忆起职业生涯的早期……那时草坪刚粉刷过,显得格外鲜绿……那时公司管理层并不太在意各种许可条款……但时代在飞速变化,如果你想成为大公司的一员,就必须遵守市场的规则。

这枚硬币的另一面是残酷的现实——资本主义的真理是,整个业务正逐渐被迫迁移到云端,或者支付昂贵的许可证费用。但如果还有另一种选择呢?——既不需要支付许可证费用,又能自由地使用 SQL Server 商业版所有重要的优点。

现在我们甚至不是在谈论开发者版,微软在 2014 年已经将其完全免费,尽管在此之前他们乐意以 59.95 美元的价格出售。更有趣的是生产服务器的成本优化,在危机时期,客户要求最大程度地降低其业务设备成本。

毫无疑问,你现在就可以打包走人,将逻辑迁移到免费的替代品,如 PostgreSQL 或 MariaDB。但一个反问立即出现——在一切都需要“马上”完成的情况下,谁会去重写和测试这些呢?即使你下定决心尝试快速迁移一个企业项目,那么结果很可能就像玩科特·柯本最喜欢的射击游戏一样,而不是成功发布。因此,我们只能考虑如何在当前的技术限制内,充分利用 Express 版的功能。

同事医生们对 SQL Server Express 版的初步诊断:患者在一个套接字内最多只能使用 4 个逻辑核心,Buffer Pool 分配的内存略高于 1GB,数据库文件大小不能超过 10GB……感谢的是,患者至少还能勉强行动,其余的还能治。

实现

令人费解的是,首先要做的是找出我们的 SQL Server 版本。原因在于,当 SQL Server 2016 SP1 于 2018 年发布时,微软展现了慷慨的奇迹,并作为其新举措——一致的可编程性表面积(CPSA)的一部分,在功能上部分统一了所有版本。

如果以前你必须根据特定版本编写代码,那么升级到 2016 SP1(及更高版本)后,许多企业版功能都变得可用,包括 Express 版。Express 版的新功能包括:支持表和索引的分区、创建列存储索引和内存中表,以及压缩表的能力。这是 SQL Server 升级值得安装的罕见场合之一。

Express 版是否足以满足生产环境的负载需求?

为了回答这个问题,让我们尝试考虑几种场景。

我们将测试单线程 OLTP 工作负载,在不同类型的表中插入/更新/删除 200,000 行。

USE [master]

GO

SET NOCOUNT ON

SET STATISTICS IO, TIME OFF

IF DB_ID('express') IS NOT NULL BEGIN

ALTER DATABASE [express] SET SINGLE_USER WITH ROLLBACK IMMEDIATE

DROP DATABASE [express]

END

GO

CREATE DATABASE [express]

ON PRIMARY (NAME = N'express', _

FILENAME = N'X:\express.mdf', SIZE = 200 MB, FILEGROWTH = 100 MB)

LOG ON (NAME = N'express_log', FILENAME = N'X:\express_log.ldf', _

SIZE = 200 MB, FILEGROWTH = 100 MB)

ALTER DATABASE [express] SET AUTO_CLOSE OFF

ALTER DATABASE [express] SET RECOVERY SIMPLE

ALTER DATABASE [express] SET MULTI_USER

ALTER DATABASE [express] SET DELAYED_DURABILITY = ALLOWED

ALTER DATABASE [express] ADD FILEGROUP [MEM] CONTAINS MEMORY_OPTIMIZED_DATA

ALTER DATABASE [express] ADD FILE (NAME = 'MEM', FILENAME = 'X:\MEM') TO FILEGROUP [MEM]

ALTER DATABASE [express] SET MEMORY_OPTIMIZED_ELEVATE_TO_SNAPSHOT = ON

GO

USE [express]

GO

CREATE TABLE [T1_CL] (A INT PRIMARY KEY, B DATETIME INDEX IX1 NONCLUSTERED)

GO

CREATE TABLE [T2_MEM] (A INT PRIMARY KEY NONCLUSTERED, B DATETIME INDEX IX1 NONCLUSTERED)

WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA)

GO

CREATE TABLE [T3_MEM_NC] (A INT PRIMARY KEY NONCLUSTERED, B DATETIME INDEX IX1 NONCLUSTERED)

WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA)

CREATE TABLE [T4_CL_DD] (A INT PRIMARY KEY, B DATETIME INDEX IX1 NONCLUSTERED)

GO

CREATE TABLE [T5_MEM_DD] (A INT PRIMARY KEY NONCLUSTERED, B DATETIME INDEX IX1 NONCLUSTERED)

WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA)

GO

CREATE TABLE [T6_MEM_NC_DD] (A INT PRIMARY KEY NONCLUSTERED, B DATETIME INDEX IX1 NONCLUSTERED)

WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA)

GO

CREATE TABLE [T7_MEM_SO] (A INT PRIMARY KEY NONCLUSTERED, B DATETIME INDEX IX1 NONCLUSTERED)

WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_ONLY)

GO

CREATE TABLE [T8_MEM_SO_NC] (A INT PRIMARY KEY NONCLUSTERED, B DATETIME INDEX IX1 NONCLUSTERED)

WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_ONLY)

GO

CREATE PROCEDURE [T3_MEM_I] (@i INT)

WITH NATIVE_COMPILATION, SCHEMABINDING

AS BEGIN ATOMIC WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'us_english')

INSERT INTO [dbo].[T3_MEM_NC] VALUES (@i, GETDATE())

END

GO

CREATE PROCEDURE [T3_MEM_U] (@i INT)

WITH NATIVE_COMPILATION, SCHEMABINDING

AS BEGIN ATOMIC WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'us_english')

UPDATE [dbo].[T3_MEM_NC] SET B = GETDATE() WHERE A = @i

END

GO

CREATE PROCEDURE [T3_MEM_D] (@i INT)

WITH NATIVE_COMPILATION, SCHEMABINDING

AS BEGIN ATOMIC WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'us_english')

DELETE [dbo].[T3_MEM_NC] WHERE A = @i

END

GO

CREATE PROCEDURE [T6_MEM_I] (@i INT)

WITH NATIVE_COMPILATION, SCHEMABINDING

AS BEGIN ATOMIC WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'us_english')

INSERT INTO [dbo].[T6_MEM_NC_DD] VALUES (@i, GETDATE())

END

GO

CREATE PROCEDURE [T6_MEM_U] (@i INT)

WITH NATIVE_COMPILATION, SCHEMABINDING

AS BEGIN ATOMIC WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'us_english')

UPDATE [dbo].[T6_MEM_NC_DD] SET B = GETDATE() WHERE A = @i

END

GO

CREATE PROCEDURE [T6_MEM_D] (@i INT)

WITH NATIVE_COMPILATION, SCHEMABINDING

AS BEGIN ATOMIC WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'us_english')

DELETE [dbo].[T6_MEM_NC_DD] WHERE A = @i

END

GO

CREATE PROCEDURE [T8_MEM_I] (@i INT)

WITH NATIVE_COMPILATION, SCHEMABINDING

AS BEGIN ATOMIC WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'us_english')

INSERT INTO [dbo].[T8_MEM_SO_NC] VALUES (@i, GETDATE())

END

GO

CREATE PROCEDURE [T8_MEM_U] (@i INT)

WITH NATIVE_COMPILATION, SCHEMABINDING

AS BEGIN ATOMIC WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'us_english')

UPDATE [dbo].[T8_MEM_SO_NC] SET B = GETDATE() WHERE A = @i

END

GO

CREATE PROCEDURE [T8_MEM_D] (@i INT)

WITH NATIVE_COMPILATION, SCHEMABINDING

AS BEGIN ATOMIC WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'us_english')

DELETE [dbo].[T8_MEM_SO_NC] WHERE A = @i

END

GO

DECLARE @i INT

, @s DATETIME

, @runs INT = 200000

DROP TABLE IF EXISTS #stats

CREATE TABLE #stats (obj VARCHAR(100), op VARCHAR(100), time_ms BIGINT)

SELECT @i = 1, @s = GETDATE()

WHILE @i <= @runs BEGIN

INSERT INTO [T1_CL] VALUES (@i, GETDATE())

SET @i += 1

END

INSERT INTO #stats SELECT 'T1_CL', 'INSERT', DATEDIFF(ms, @s, GETDATE())

SELECT @i = 1, @s = GETDATE()

WHILE @i <= @runs BEGIN

UPDATE [T1_CL] SET B = GETDATE() WHERE A = @i

SET @i += 1

END

INSERT INTO #stats SELECT 'T1_CL', 'UPDATE', DATEDIFF(ms, @s, GETDATE())

SELECT @i = 1, @s = GETDATE()

WHILE @i <= @runs BEGIN

DELETE [T1_CL] WHERE A = @i

SET @i += 1

END

INSERT INTO #stats SELECT 'T1_CL', 'DELETE', DATEDIFF(ms, @s, GETDATE())

SELECT @i = 1, @s = GETDATE()

WHILE @i <= @runs BEGIN

INSERT INTO [T2_MEM] VALUES (@i, GETDATE())

SET @i += 1

END

INSERT INTO #stats SELECT 'T2_MEM', 'INSERT', DATEDIFF(ms, @s, GETDATE())

SELECT @i = 1, @s = GETDATE()

WHILE @i <= @runs BEGIN

UPDATE [T2_MEM] SET B = GETDATE() WHERE A = @i

SET @i += 1

END

INSERT INTO #stats SELECT 'T2_MEM', 'UPDATE', DATEDIFF(ms, @s, GETDATE())

SELECT @i = 1, @s = GETDATE()

WHILE @i <= @runs BEGIN

DELETE [T2_MEM] WHERE A = @i

SET @i += 1

END

INSERT INTO #stats SELECT 'T2_MEM', 'DELETE', DATEDIFF(ms, @s, GETDATE())

SELECT @i = 1, @s = GETDATE()

WHILE @i <= @runs BEGIN

EXEC [T3_MEM_I] @i

SET @i += 1

END

INSERT INTO #stats SELECT 'T3_MEM_NC', 'INSERT', DATEDIFF(ms, @s, GETDATE())

SELECT @i = 1, @s = GETDATE()

WHILE @i <= @runs BEGIN

EXEC [T3_MEM_U] @i

SET @i += 1

END

INSERT INTO #stats SELECT 'T3_MEM_NC', 'UPDATE', DATEDIFF(ms, @s, GETDATE())

SELECT @i = 1, @s = GETDATE()

WHILE @i <= @runs BEGIN

EXEC [T3_MEM_D] @i

SET @i += 1

END

INSERT INTO #stats SELECT 'T3_MEM_NC', 'DELETE', DATEDIFF(ms, @s, GETDATE())

SELECT @i = 1, @s = GETDATE()

WHILE @i <= @runs BEGIN

BEGIN TRANSACTION t

INSERT INTO [T4_CL_DD] VALUES (@i, GETDATE())

COMMIT TRANSACTION t WITH (DELAYED_DURABILITY = ON)

SET @i += 1

END

INSERT INTO #stats SELECT 'T4_CL_DD', 'INSERT', DATEDIFF(ms, @s, GETDATE())

SELECT @i = 1, @s = GETDATE()

WHILE @i <= @runs BEGIN

BEGIN TRANSACTION t

UPDATE [T4_CL_DD] SET B = GETDATE() WHERE A = @i

COMMIT TRANSACTION t WITH (DELAYED_DURABILITY = ON)

SET @i += 1

END

INSERT INTO #stats SELECT 'T4_CL_DD', 'UPDATE', DATEDIFF(ms, @s, GETDATE())

SELECT @i = 1, @s = GETDATE()

WHILE @i <= @runs BEGIN

BEGIN TRANSACTION t

DELETE [T4_CL_DD] WHERE A = @i

COMMIT TRANSACTION t WITH (DELAYED_DURABILITY = ON)

SET @i += 1

END

INSERT INTO #stats SELECT 'T4_CL_DD', 'DELETE', DATEDIFF(ms, @s, GETDATE())

SELECT @i = 1, @s = GETDATE()

WHILE @i <= @runs BEGIN

BEGIN TRANSACTION t

INSERT INTO [T5_MEM_DD] VALUES (@i, GETDATE())

COMMIT TRANSACTION t WITH (DELAYED_DURABILITY = ON)

SET @i += 1

END

INSERT INTO #stats SELECT 'T5_MEM_DD', 'INSERT', DATEDIFF(ms, @s, GETDATE())

SELECT @i = 1, @s = GETDATE()

WHILE @i <= @runs BEGIN

BEGIN TRANSACTION t

UPDATE [T5_MEM_DD] SET B = GETDATE() WHERE A = @i

COMMIT TRANSACTION t WITH (DELAYED_DURABILITY = ON)

SET @i += 1

END

INSERT INTO #stats SELECT 'T5_MEM_DD', 'UPDATE', DATEDIFF(ms, @s, GETDATE())

SELECT @i = 1, @s = GETDATE()

WHILE @i <= @runs BEGIN

BEGIN TRANSACTION t

DELETE [T5_MEM_DD] WHERE A = @i

COMMIT TRANSACTION t WITH (DELAYED_DURABILITY = ON)

SET @i += 1

END

INSERT INTO #stats SELECT 'T5_MEM_DD', 'DELETE', DATEDIFF(ms, @s, GETDATE())

SELECT @i = 1, @s = GETDATE()

WHILE @i <= @runs BEGIN

BEGIN TRANSACTION t

EXEC [T6_MEM_I] @i

COMMIT TRANSACTION t WITH (DELAYED_DURABILITY = ON)

SET @i += 1

END

INSERT INTO #stats SELECT 'T6_MEM_NC_DD', 'INSERT', DATEDIFF(ms, @s, GETDATE())

SELECT @i = 1, @s = GETDATE()

WHILE @i <= @runs BEGIN

BEGIN TRANSACTION t

EXEC [T6_MEM_U] @i

COMMIT TRANSACTION t WITH (DELAYED_DURABILITY = ON)

SET @i += 1

END

INSERT INTO #stats SELECT 'T6_MEM_NC_DD', 'UPDATE', DATEDIFF(ms, @s, GETDATE())

SELECT @i = 1, @s = GETDATE()

WHILE @i <= @runs BEGIN

BEGIN TRANSACTION t

EXEC [T6_MEM_D] @i

COMMIT TRANSACTION t WITH (DELAYED_DURABILITY = ON)

SET @i += 1

END

INSERT INTO #stats SELECT 'T6_MEM_NC_DD', 'DELETE', DATEDIFF(ms, @s, GETDATE())

SELECT @i = 1, @s = GETDATE()

WHILE @i <= @runs BEGIN

INSERT INTO [T7_MEM_SO] VALUES (@i, GETDATE())

SET @i += 1

END

INSERT INTO #stats SELECT 'T7_MEM_SO', 'INSERT', DATEDIFF(ms, @s, GETDATE())

SELECT @i = 1, @s = GETDATE()

WHILE @i <= @runs BEGIN

UPDATE [T7_MEM_SO] SET B = GETDATE() WHERE A = @i

SET @i += 1

END

INSERT INTO #stats SELECT 'T7_MEM_SO', 'UPDATE', DATEDIFF(ms, @s, GETDATE())

SELECT @i = 1, @s = GETDATE()

WHILE @i <= @runs BEGIN

DELETE [T7_MEM_SO] WHERE A = @i

SET @i += 1

END

INSERT INTO #stats SELECT 'T7_MEM_SO', 'DELETE', DATEDIFF(ms, @s, GETDATE())

SELECT @i = 1, @s = GETDATE()

WHILE @i <= @runs BEGIN

EXEC [T8_MEM_I] @i

SET @i += 1

END

INSERT INTO #stats SELECT 'T8_MEM_SO_NC', 'INSERT', DATEDIFF(ms, @s, GETDATE())

SELECT @i = 1, @s = GETDATE()

WHILE @i <= @runs BEGIN

EXEC [T8_MEM_U] @i

SET @i += 1

END

INSERT INTO #stats SELECT 'T8_MEM_SO_NC', 'UPDATE', DATEDIFF(ms, @s, GETDATE())

SELECT @i = 1, @s = GETDATE()

WHILE @i <= @runs BEGIN

EXEC [T8_MEM_D] @i

SET @i += 1

END

INSERT INTO #stats SELECT 'T8_MEM_SO_NC', 'DELETE', DATEDIFF(ms, @s, GETDATE())

GO

SELECT obj

, [I] = MAX(CASE WHEN op = 'INSERT' THEN time_ms END)

, [U] = MAX(CASE WHEN op = 'UPDATE' THEN time_ms END)

, [D] = MAX(CASE WHEN op = 'DELETE' THEN time_ms END)

FROM #stats

GROUP BY obj

USE [master]

GO

IF DB_ID('express') IS NOT NULL BEGIN

ALTER DATABASE [express] SET SINGLE_USER WITH ROLLBACK IMMEDIATE

DROP DATABASE [express]

END

执行结果如下:

I U D

--------------- ------- ------- ------- -------------------------------------------------------

T1_CL 12173 14434 12576 B-Tree Index

T2_MEM 14774 14593 13777 In-Memory SCHEMA_AND_DATA

T3_MEM_NC 11563 10560 10097 In-Memory SCHEMA_AND_DATA + Native Compile

T4_CL_DD 5176 7294 5303 B-Tree Index + Delayed Durability

T5_MEM_DD 7460 7163 6214 In-Memory SCHEMA_AND_DATA + Delayed Durability

T6_MEM_NC_DD 8386 7494 6973 In-Memory SCHEMA_AND_DATA +

Native Compile + Delayed Durability

T7_MEM_SO 5667 5383 4473 In-Memory SCHEMA_ONLY

T8_MEM_SO_NC 3250 2430 2287 In-Memory SCHEMA_ONLY + Native Compile

集群索引(T1_CL)的表表现出我们最糟糕的结果之一。如果您查看第一个表执行期间的等待统计信息

SELECT TOP(20) wait_type

, wait_time = CAST(wait_time_ms / 1000. AS DECIMAL(18,4))

, wait_resource = _

CAST((wait_time_ms - signal_wait_time_ms) / 1000. AS DECIMAL(18,4))

, wait_signal = CAST(signal_wait_time_ms / 1000. AS DECIMAL(18,4))

, wait_time_percent = CAST(100. * wait_time_ms / _

NULLIF(SUM(wait_time_ms) OVER (), 0) AS DECIMAL(18,2))

, waiting_tasks_count

FROM sys.dm_os_wait_stats

WHERE waiting_tasks_count > 0

AND wait_time_ms > 0

AND wait_type NOT IN (

N'BROKER_EVENTHANDLER', N'BROKER_RECEIVE_WAITFOR',

N'BROKER_TASK_STOP', N'BROKER_TO_FLUSH',

N'BROKER_TRANSMITTER', N'CHECKPOINT_QUEUE',

N'CHKPT', N'CLR_AUTO_EVENT',

N'CLR_MANUAL_EVENT', N'CLR_SEMAPHORE',

N'DBMIRROR_DBM_EVENT', N'DBMIRROR_EVENTS_QUEUE',

N'DBMIRROR_WORKER_QUEUE', N'DBMIRRORING_CMD',

N'DIRTY_PAGE_POLL', N'DISPATCHER_QUEUE_SEMAPHORE',

N'EXECSYNC', N'FSAGENT',

N'FT_IFTS_SCHEDULER_IDLE_WAIT', N'FT_IFTSHC_MUTEX',

N'HADR_CLUSAPI_CALL', N'HADR_FILESTREAM_IOMGR_IOCOMPLETION',

N'HADR_LOGCAPTURE_WAIT', N'HADR_NOTIFICATION_DEQUEUE',

N'HADR_TIMER_TASK', N'HADR_WORK_QUEUE',

N'KSOURCE_WAKEUP', N'LAZYWRITER_SLEEP',

N'LOGMGR_QUEUE', N'ONDEMAND_TASK_QUEUE',

N'PWAIT_ALL_COMPONENTS_INITIALIZED',

N'QDS_PERSIST_TASK_MAIN_LOOP_SLEEP',

N'QDS_CLEANUP_STALE_QUERIES_TASK_MAIN_LOOP_SLEEP',

N'REQUEST_FOR_DEADLOCK_SEARCH', N'RESOURCE_QUEUE',

N'SERVER_IDLE_CHECK', N'SLEEP_BPOOL_FLUSH',

N'SLEEP_DBSTARTUP', N'SLEEP_DCOMSTARTUP',

N'SLEEP_MASTERDBREADY', N'SLEEP_MASTERMDREADY',

N'SLEEP_MASTERUPGRADED', N'SLEEP_MSDBSTARTUP',

N'SLEEP_SYSTEMTASK', N'SLEEP_TASK',

N'SLEEP_TEMPDBSTARTUP', N'SNI_HTTP_ACCEPT',

N'SP_SERVER_DIAGNOSTICS_SLEEP', N'SQLTRACE_BUFFER_FLUSH',

N'SQLTRACE_INCREMENTAL_FLUSH_SLEEP',

N'SQLTRACE_WAIT_ENTRIES', N'WAIT_FOR_RESULTS',

N'WAITFOR', N'WAITFOR_TASKSHUTDOWN',

N'WAIT_XTP_HOST_WAIT', N'WAIT_XTP_OFFLINE_CKPT_NEW_LOG',

N'WAIT_XTP_CKPT_CLOSE', N'XE_DISPATCHER_JOIN',

N'XE_DISPATCHER_WAIT', N'XE_TIMER_EVENT'

)

ORDER BY wait_time_ms DESC

我们会注意到最大的等待发生在 WRITELOG。

wait_type wait_time wait_resource wait_signal wait_time_percent waiting_tasks_count

-------------------------------- ---------- -------------- ------------ ------------------ --------------------

WRITELOG 13.5480 10.7500 2.7980 95.66 600048

MEMORY_ALLOCATION_EXT 0.5030 0.5030 0.0000 3.55 608695

PREEMPTIVE_OS_WRITEFILEGATHER 0.0250 0.0250 0.0000 0.18 3

ASYNC_IO_COMPLETION 0.0200 0.0200 0.0000 0.14 1

IO_COMPLETION 0.0200 0.0200 0.0000 0.14 8

打开 Paul Randal 编写的 SQL Server 等待统计信息 百科全书,并在查看 MSDN 时查找 WRITELOG。

这是日志管理系统等待日志刷新到磁盘。它通常表明 I/O 子系统无法跟上日志刷新量,但在非常高吞吐量的系统上,也可能是由内部日志刷新限制引起的,这可能意味着你需要将工作负载分散到多个数据库,甚至让事务更长一些以减少日志刷新。为了确保是 I/O 子系统的问题,请使用 DMV sys.dm_io_virtual_file_stats 来检查日志文件的 I/O 延迟,并查看它是否与平均 WRITELOG 时间相关。如果 WRITELOG 时间更长,说明存在内部争用,需要分片。如果不是,则调查你为何创建了如此多的事务日志。

我们的情况非常明显,作为 WRITELOG 等待问题的一种解决方案,可以一次性批量插入数据,而不是逐行插入。但我们对上述负载优化仅有学术兴趣,所以值得弄清楚 SQL Server 中数据是如何修改的。

假设我们正在进行行修改。SQL Server 调用存储引擎组件,然后该组件调用缓冲区管理器(它处理内存和磁盘中的缓冲区),并表示它想更改数据。之后,缓冲区管理器转向缓冲区池,并在内存中修改必要的页(如果这些页不存在,它将从磁盘加载它们,在此过程中,我们会遇到 PAGEIOLATCH_* 等待)。当内存中的页发生更改时,SQL Server 此时还不能说请求已完成。否则,ACID 原则(持久性)之一就会被违反,即在修改结束时,保证所有数据都已加载到磁盘。

在内存中的页被修改后,存储引擎调用日志管理器,将数据写入日志文件。但它不是立即执行,而是通过日志缓冲区,其大小为 60KB(存在细微差别,但我们在此略过),用于优化与日志文件的交互。当以下情况发生时,数据会从缓冲区刷新到日志文件:缓冲区已满、手动执行了 sp_flush_log、或者提交了一个事务,并且日志缓冲区中的所有内容都已写入日志文件。当数据已保存到日志文件后,就确认数据修改成功,并通知客户端。

根据这个逻辑,你会注意到数据并不是直接写入数据文件。为了优化与磁盘子系统的交互,SQL Server 使用异步机制将更改写入数据库文件。总共有两种这样的机制:惰性写入器(Lazy Writer)(定期运行,检查 SQL Server 是否有足够的内存,如果观察到内存压力,则从内存中预取页并写入数据库文件,已更改的页会被刷新到磁盘并从内存中清除)和检查点(Checkpoint)(大约每分钟扫描一次脏页,将其写入磁盘并保留在内存中)。

当系统中出现大量小事务时(例如,如果数据是逐行修改的),那么每次提交后,数据都会从日志缓冲区进入事务日志。请记住,所有更改都会同步发送到日志文件,其他事务必须排队等待——这是构建高性能系统的限制因素。

那么,解决这个问题的替代方案是什么?

在 SQL Server 2014 中,可以创建 内存中表,开发人员声称它们可以通过新的 Hekaton 引擎显著加速 OLTP 工作负载。但如果您看上面的例子(T2_MEM),内存中表的单线程性能甚至比具有集群索引的传统表(T1_CL)还要差——这是由于 XTP_PREEMPTIVE_TASK 进程在后台将内存中表的较大更改提交到日志文件(并且如实践所示,它们做得并不太好)。

事实上,内存中的全部意义在于改进的并发访问机制和减少数据修改时的锁。在这种场景下,它们的使用确实会带来惊人的结果,但它们不应被用于简单的 CRUD 操作。

在进一步尝试加速内存中表时,我们看到了类似的情况,即在它们之上封装 原生编译 的存储过程(T3_MEM_NC),它们在进行一些计算和迭代数据处理时完美地优化了性能,但作为 CRUD 操作的封装器,它们表现平平,只会减轻实际调用时的负载。

总的来说,我一直不喜欢内存中表和原生编译存储——SQL Server 2014/2016 中与它们相关的 Bug 太多了。有些问题已经修复,有些已经改进,但你仍然需要非常谨慎地使用这项技术。例如,在创建了内存中文件组之后,你不能简单地删除它而不重新创建目标数据库。一切都会好起来,但有时即使你只更新内存中表中的几行,这个文件组也会增长到几个 GB……如果我们谈论的是生产环境,我不会在主数据库中使用这项技术。

完全不同的是启用 延迟持久性 选项,该选项允许你在提交事务时不必立即将数据保存到日志文件,而是等到累积了 60KB 的更改。这可以通过针对所选数据库的所有事务级别的强制设置来完成。

ALTER DATABASE TT SET DELAYED_DURABILITY = FORCED

或者在单个事务中。

ALTER DATABASE TT SET DELAYED_DURABILITY = ALLOWED

GO

BEGIN TRANSACTION t

...

COMMIT TRANSACTION t WITH (DELAYED_DURABILITY = ON)

使用此选项的优势在 T4_CL_DD 的示例中(比 T1_CL 快 2.5 倍)得到了清晰的展示。启用此选项当然也有一些缺点,当在系统故障或断电的成功巧合下,你可能会丢失大约 60KB 的数据。

我认为你不应该强加你的观点,因为在每种情况下,都需要权衡利弊。但我会补充一点,启用延迟持久性在我不得不紧急卸载 OLTP 负载下的磁盘子系统时,曾不止一次地挽救了我。

现在,我们来谈谈最有趣的部分——如何最大程度地加快 OLTP 操作的速度?答案在于正确使用内存中表。在此之前,我已经相当批评它们了,但所有性能问题都只与以 SCHEMA_AND_DATA 创建的表有关(当数据同时存储在 RAM 和磁盘中时)。但是,如果你以 SCHEMA_ONLY 选项创建内存中表,那么数据将只存储在 RAM 中……缺点是,当 SQL Server 重启时,这些表中的数据将丢失。此外,与普通表(T8_MEM_SO/T8_MEM_SO_NC)相比,它能够将数据修改操作的速度提高 4 倍。

为了说明我的工作流程,我创建了一个中间数据库,其中有一个内存中的 SCHEMA_ONLY 表(我们将所有操作封装在原生编译过程中),数据以最大速度持续地流入其中,然后我们将其分流到主数据库中进行永久存储。此外,内存中的 SCHEMA_ONLY 表非常适合作为 ETL 加载的中间缓冲区,因为它们不会给磁盘子系统带来任何负载。

现在让我们转向 DW 工作负载,其特点是涉及提取大量数据的分析查询。

为了做到这一点,让我们创建几个具有不同压缩选项的表并进行实验。

USE [master]

GO

SET NOCOUNT ON

SET STATISTICS IO, TIME OFF

IF DB_ID('express') IS NOT NULL BEGIN

ALTER DATABASE [express] SET SINGLE_USER WITH ROLLBACK IMMEDIATE

DROP DATABASE [express]

END

GO

CREATE DATABASE [express]

ON PRIMARY (NAME = N'express', FILENAME = N'X:\express.mdf', _

SIZE = 200 MB, FILEGROWTH = 100 MB)

LOG ON (NAME = N'express_log', FILENAME = N'X:\express_log.ldf', _

SIZE = 200 MB, FILEGROWTH = 100 MB)

ALTER DATABASE [express] SET AUTO_CLOSE OFF

ALTER DATABASE [express] SET RECOVERY SIMPLE

ALTER DATABASE [express] SET DELAYED_DURABILITY = FORCED

GO

USE [express]

GO

DROP TABLE IF EXISTS [T1_HEAP]

CREATE TABLE [T1_HEAP] (

[INT] INT NOT NULL

, [VARCHAR] VARCHAR(100) NOT NULL

, [DATETIME] DATETIME NOT NULL

)

GO

;WITH E1(N) AS (SELECT * FROM (VALUES (1),(1),(1),(1),(1),(1),(1),(1),(1),(1)) t(N))

, E2(N) AS (SELECT '1' FROM E1 a, E1 b)

, E4(N) AS (SELECT '1' FROM E2 a, E2 b)

, E8(N) AS (SELECT '1' FROM E4 a, E4 b)

INSERT INTO [T1_HEAP] WITH(TABLOCK) ([INT], [VARCHAR], [DATETIME])

SELECT TOP(5000000)

ROW_NUMBER() OVER (ORDER BY 1/0)

, CAST(ROW_NUMBER() OVER (ORDER BY 1/0) AS VARCHAR(100))

, DATEADD(DAY, ROW_NUMBER() OVER (ORDER BY 1/0) % 100, '20180101')

FROM E8

GO

DROP TABLE IF EXISTS [T2_CL]

SELECT * INTO [T2_CL] FROM [T1_HEAP] WHERE 1=0

CREATE CLUSTERED INDEX IX ON [T2_CL] ([INT]) WITH (DATA_COMPRESSION = NONE)

INSERT INTO [T2_CL] WITH(TABLOCK)

SELECT * FROM [T1_HEAP]

GO

DROP TABLE IF EXISTS [T3_CL_ROW]

SELECT * INTO [T3_CL_ROW] FROM [T2_CL] WHERE 1=0

CREATE CLUSTERED INDEX IX ON [T3_CL_ROW] ([INT]) WITH (DATA_COMPRESSION = ROW)

INSERT INTO [T3_CL_ROW] WITH(TABLOCK)

SELECT * FROM [T2_CL]

GO

DROP TABLE IF EXISTS [T4_CL_PAGE]

SELECT * INTO [T4_CL_PAGE] FROM [T2_CL] WHERE 1=0

CREATE CLUSTERED INDEX IX ON [T4_CL_PAGE] ([INT]) WITH (DATA_COMPRESSION = PAGE)

INSERT INTO [T4_CL_PAGE] WITH(TABLOCK)

SELECT * FROM [T2_CL]

GO

DROP TABLE IF EXISTS [T5_CCI]

SELECT * INTO [T5_CCI] FROM [T2_CL] WHERE 1=0

CREATE CLUSTERED COLUMNSTORE INDEX IX ON [T5_CCI] WITH (DATA_COMPRESSION = COLUMNSTORE)

INSERT INTO [T5_CCI] WITH(TABLOCK)

SELECT * FROM [T2_CL]

GO

DROP TABLE IF EXISTS [T6_CCI_ARCHIVE]

SELECT * INTO [T6_CCI_ARCHIVE] FROM [T2_CL] WHERE 1=0

CREATE CLUSTERED COLUMNSTORE INDEX IX ON [T6_CCI_ARCHIVE] _

WITH (DATA_COMPRESSION = COLUMNSTORE_ARCHIVE)

INSERT INTO [T6_CCI_ARCHIVE] WITH(TABLOCK)

SELECT * FROM [T2_CL]

GO

首先要注意的是表的最终大小。

SELECT o.[name]

, i.[rows]

, i.[type_desc]

, total_mb = CAST(i.total_pages * 8. / 1024 AS DECIMAL(18,2))

FROM sys.objects o

JOIN (

SELECT i.[object_id]

, a.[type_desc]

, total_pages = SUM(a.total_pages)

, [rows] = SUM(CASE WHEN i.index_id IN (0,1) THEN p.[rows] END)

FROM sys.indexes i

JOIN sys.partitions p ON i.[object_id] = p.[object_id] AND i.index_id = p.index_id

JOIN sys.allocation_units a ON p.[partition_id] = a.container_id

WHERE a.total_pages > 0

GROUP BY i.[object_id]

, a.[type_desc]

) i ON o.[object_id] = i.[object_id]

WHERE o.[type] = 'U'

由于 Express 版可以使用压缩和列存储索引,因此出现了很多选项,可以在不显着损失性能的情况下,将更多信息存储在数据库文件允许的 10GB 限制内。

rows type_desc total_mb

--------------- -------- ------------ ---------

T1_HEAP 5000000 IN_ROW_DATA 153.38

T2_CL 5000000 IN_ROW_DATA 163.45

T3_CL_ROW 5000000 IN_ROW_DATA 110.13

T4_CL_PAGE 5000000 IN_ROW_DATA 72.63

T5_CCI 5000000 LOB_DATA 81.20

T6_CCI_ARCHIVE 5000000 LOB_DATA 41.13

如果我们开始讨论数据压缩,那么行压缩(ROW compression)会将值截断到可能的最小固定类型而不丢失数据;页压缩(PAGE compression)则在行压缩的基础上,在页面级别以二进制形式进一步压缩数据。在这种形式下,页面同时存储在磁盘和缓冲区池中,只有在直接访问数据时,才会即时进行解压缩。

使用压缩的明显好处在于减少了磁盘 I/O 操作和缓冲区池中用于数据存储的内存量——如果我们有慢速磁盘、少量 RAM 和相对未被充分利用的处理器,这一点尤其重要。压缩的缺点是额外的处理器负载,但并不像“被微软像麻风病人一样抛弃”那样严重,以至于完全忽略这一功能。

使用列存储索引看起来非常有趣,它可以显著压缩数据并提高分析查询的性能。让我们快速了解一下它们是如何工作的……由于它是列式存储模型,表中的数据被分成大约一百万行的 RowGroup(总数可能与数据插入表的方式不同),然后在 RowGroup 中,每列都以段的形式表示,并被压缩成具有自身元信息的 LOB 对象(例如,它存储压缩序列中的最小值和最大值)。

与 PAGE / ROW 压缩不同,列存储索引根据目标列的数据类型使用不同的压缩选项——这可以是值缩放、字典压缩、位数组打包以及其他各种选项(游程编码、霍夫曼编码、二进制压缩、LZ77)。结果是,我们可以更优化地压缩每一列。

你可以通过此查询查看一个或另一个 RowGroup 是如何压缩的。

SELECT o.[name]

, row_group_id

, state_description

, total_rows

, size_mb = CAST(size_in_bytes / (1024. * 1024) AS DECIMAL(18,2))

, total_mb = CAST(SUM(size_in_bytes) OVER _

(PARTITION BY i.[object_id]) / 8192 * 8. / 1024 AS DECIMAL(18,2))

FROM sys.indexes i

JOIN sys.objects o ON i.[object_id] = o.[object_id]

CROSS APPLY sys.fn_column_store_row_groups(i.[object_id]) s

WHERE i.[type] IN (5, 6)

AND i.[object_id] = OBJECT_ID('T5_CCI')

ORDER BY i.[object_id]

, s.row_group_id

row_group_id state_description total_rows deleted_rows size_mb total_mb

------------- ------------------ ----------- ------------- -------- ---------

0 COMPRESSED 593581 0 3.78 31.80

1 COMPRESSED 595539 0 3.79 31.80

2 COMPRESSED 595539 0 3.79 31.80

3 COMPRESSED 599030 0 3.81 31.80

4 COMPRESSED 595539 0 3.79 31.80

5 COMPRESSED 686243 0 4.37 31.80

6 COMPRESSED 595539 0 3.79 31.80

7 COMPRESSED 738990 0 4.70 31.80

请注意一个细微之处,它可能会极大地影响 Express 版中列存储索引的使用性能。由于段和字典(解压缩发生的基础)存储在磁盘上的不同结构中,因此所有字典的大小都适合内存(在 Express 版中,这最多需要 350MB)至关重要。

SELECT [column] = COL_NAME(p.[object_id], s.column_id)

, s.dictionary_id

, s.entry_count

, size_mb = CAST(s.on_disk_size / (1024. * 1024) AS DECIMAL(18,2))

, total_mb = CAST(SUM(s.on_disk_size) OVER () / 8192 * 8. / 1024 AS DECIMAL(18,2))

FROM sys.column_store_dictionaries s

JOIN sys.partitions p ON p.hobt_id = s.hobt_id

WHERE p.[object_id] = OBJECT_ID('T5_CCI')

column dictionary_id entry_count size_mb total_mb

---------- ------------- ------------ -------- ----------

VARCHAR 1 593581 6.39 53.68

VARCHAR 2 738990 7.98 53.68

VARCHAR 3 686243 7.38 53.68

VARCHAR 4 595539 6.37 53.68

VARCHAR 5 595539 6.39 53.68

VARCHAR 6 595539 6.38 53.68

VARCHAR 7 595539 6.39 53.68

VARCHAR 8 599030 6.40 53.68

DATETIME 1 100 0.00 53.68

DATETIME 2 100 0.00 53.68

DATETIME 3 100 0.00 53.68

DATETIME 4 100 0.00 53.68

DATETIME 5 100 0.00 53.68

DATETIME 6 100 0.00 53.68

DATETIME 7 100 0.00 53.68

DATETIME 8 100 0.00 53.68

同时,段可以根据需要从磁盘加载,并且几乎不会影响处理器负载。

SELECT [column] = COL_NAME(p.[object_id], s.column_id)

, s.segment_id

, s.row_count

, CAST(s.on_disk_size / (1024. * 1024) AS DECIMAL(18,2))

FROM sys.column_store_segments s

JOIN sys.partitions p ON p.hobt_id = s.hobt_id

WHERE p.[object_id] = OBJECT_ID('T5_CCI')

column segment_id row_count size_mb total_mb

---------- ----------- ----------- -------- ---------

INT 0 593581 2.26 31.80

INT 1 595539 2.27 31.80

INT 2 595539 2.27 31.80

INT 3 599030 2.29 31.80

INT 4 595539 2.27 31.80

INT 5 686243 2.62 31.80

INT 6 595539 2.27 31.80

INT 7 738990 2.82 31.80

VARCHAR 0 593581 1.51 31.80

VARCHAR 1 595539 1.52 31.80

VARCHAR 2 595539 1.52 31.80

VARCHAR 3 599030 1.52 31.80

VARCHAR 4 595539 1.52 31.80

VARCHAR 5 686243 1.75 31.80

VARCHAR 6 595539 1.52 31.80

VARCHAR 7 738990 1.88 31.80

DATETIME 0 593581 0.01 31.80

DATETIME 1 595539 0.01 31.80

DATETIME 2 595539 0.01 31.80

DATETIME 3 599030 0.01 31.80

DATETIME 4 595539 0.01 31.80

DATETIME 5 686243 0.01 31.80

DATETIME 6 595539 0.01 31.80

DATETIME 7 738990 0.01 31.80

请注意,RowGroup 段中的唯一记录越少,字典的大小就越小。列存储的分区以及将数据插入到正确的部分并使用 TABLOCK 提示将导致更小的本地字典,这意味着它将减少列存储索引的使用开销。实际上,优化字典最简单的方法是数据本身——列中的唯一数据越少越好(在 DATETIME 的例子中可以看到)。

由于列式模型,只会提取我们请求的列,并且额外的过滤器可以由于上述元信息而限制 RowGroup 的提取。结果,我们得到了一种伪索引的类似物,它同时存在于所有列上,这使得我们可以非常快速地进行聚合和过滤……再次,它也有其自身的细微差别。

让我们看几个例子来展示列存储索引的好处。

DBCC DROPCLEANBUFFERS

SET STATISTICS IO, TIME ON

SELECT COUNT(*), MIN([DATETIME]), MAX([INT]) FROM [T1_HEAP]

SELECT COUNT(*), MIN([DATETIME]), MAX([INT]) FROM [T2_CL]

SELECT COUNT(*), MIN([DATETIME]), MAX([INT]) FROM [T3_CL_ROW]

SELECT COUNT(*), MIN([DATETIME]), MAX([INT]) FROM [T4_CL_PAGE]

SELECT COUNT(*), MIN([DATETIME]), MAX([INT]) FROM [T5_CCI]

SELECT COUNT(*), MIN([DATETIME]), MAX([INT]) FROM [T6_CCI_ARCHIVE]

SET STATISTICS IO, TIME OFF

俗话说,感受区别。

Table 'T1_HEAP'. Scan count 1, logical reads 19633, ...

CPU time = 391 ms, elapsed time = 400 ms.

Table 'T2_CL'. Scan count 1, logical reads 20911, ...

CPU time = 312 ms, elapsed time = 391 ms.

Table 'T3_CL_ROW'. Scan count 1, logical reads 14093, ...

CPU time = 485 ms, elapsed time = 580 ms.

Table 'T4_CL_PAGE'. Scan count 1, logical reads 9286, ...

CPU time = 828 ms, elapsed time = 1000 ms.

Table 'T5_CCI'. Scan count 1, ..., lob logical reads 5122, ...

CPU time = 8 ms, elapsed time = 14 ms.

Table 'T6_CCI_ARCHIVE'. Scan count 1, ..., lob logical reads 2576, ...

CPU time = 78 ms, elapsed time = 74 ms.

在过滤时,可能会出现一些不太好的细微差别。

DBCC DROPCLEANBUFFERS

SET STATISTICS IO, TIME ON

SELECT * FROM [T5_CCI] WHERE [INT] = 1

SELECT * FROM [T5_CCI] WHERE [DATETIME] = GETDATE()

SELECT * FROM [T5_CCI] WHERE [VARCHAR] = '1'

SET STATISTICS IO, TIME OFF

原因是,对于某些数据类型(NUMERIC, DATETIMEOFFSET, [N] CHAR, [N] VARCHAR, VARBINARY, UNIQUEIDENTIFIER, XML),行组消除 不受支持。

Table 'T5_CCI'. Scan count 1, ..., lob logical reads 2713, ...

Table 'T5_CCI'. Segment reads 1, segment skipped 7.

CPU time = 15 ms, elapsed time = 9 ms.

Table 'T5_CCI'. Scan count 1, ..., lob logical reads 0, ...

Table 'T5_CCI'. Segment reads 0, segment skipped 8.

CPU time = 0 ms, elapsed time = 0 ms.

Table 'T5_CCI'. Scan count 1, ..., lob logical reads 22724, ...

Table 'T5_CCI'. Segment reads 8, segment skipped 0.

CPU time = 547 ms, elapsed time = 669 ms.

在某些情况下,优化器存在明显的缺陷,这让人联想到 SQL Server 2008R2 中的一个旧 bug(预聚合比以更简洁的方式编写的聚合更快)。

DBCC DROPCLEANBUFFERS

SET STATISTICS IO, TIME ON

SELECT EOMONTH([DATETIME]), Cnt = SUM(Cnt)

FROM (

SELECT [DATETIME], Cnt = COUNT(*)

FROM [T5_CCI]

GROUP BY [DATETIME]

) t

GROUP BY EOMONTH([DATETIME])

SELECT EOMONTH([DATETIME]), Cnt = COUNT(*)

FROM [T5_CCI]

GROUP BY EOMONTH([DATETIME])

SET STATISTICS IO, TIME OFF

坦白说,这种情况比比皆是。

Table 'T5_CCI'. Scan count 1, ..., lob logical reads 64, ...

CPU time = 0 ms, elapsed time = 2 ms.

Table 'T5_CCI'. Scan count 1, ..., lob logical reads 32, ...

CPU time = 344 ms, elapsed time = 380 ms.

本文无法全部涵盖,但您需要了解的许多内容都可以在这个 博客 中找到。我强烈推荐这个资源来深入了解列存储索引的主题!

如果功能或多或少清晰,并且我希望通过上面的例子能够说服你,它们通常不是 Express 版全面开发的限制因素。但资源限制呢……我只能说每个具体情况都单独处理。

Express 版允许每个实例使用 4 个核心,但是什么阻止我们部署多个实例在一个服务器上(例如,一个有 16 个核心的服务器),为每个实例分配固定的物理核心,并获得一个经济高效的可扩展系统的模拟,特别是在微服务架构的情况下——当每个服务都处理其数据库的副本时。

缺少 1GB 的缓冲区池?也许应该通过优化查询和引入分区、列存储索引或简单地压缩表中的数据来最小化物理读取。如果这不可行,那么迁移到更快的 RAID。

但数据库文件最大 10GB 的限制呢?当我们尝试将其增加到超出指定值时,会收到错误。

有几种方法可以解决这个问题。

我们可以创建几个数据库,它们将包含我们自己的历史数据部分。对于这些表中的每一个,我们将设置一个约束,然后在一个视图中合并所有这些表。这将为我们提供单个实例内的水平分片。

USE [master]

GO

SET NOCOUNT ON

SET STATISTICS IO, TIME OFF

IF DB_ID('DB_2019') IS NOT NULL BEGIN

ALTER DATABASE [DB_2019] SET SINGLE_USER WITH ROLLBACK IMMEDIATE

DROP DATABASE [DB_2019]

END

GO

IF DB_ID('DB_2020') IS NOT NULL BEGIN

ALTER DATABASE [DB_2020] SET SINGLE_USER WITH ROLLBACK IMMEDIATE

DROP DATABASE [DB_2020]

END

GO

IF DB_ID('DB_2021') IS NOT NULL BEGIN

ALTER DATABASE [DB_2021] SET SINGLE_USER WITH ROLLBACK IMMEDIATE

DROP DATABASE [DB_2021]

END

GO

IF DB_ID('DB') IS NOT NULL BEGIN

ALTER DATABASE [DB] SET SINGLE_USER WITH ROLLBACK IMMEDIATE

DROP DATABASE [DB]

END

GO

CREATE DATABASE [DB_2019]

ALTER DATABASE [DB_2019] SET AUTO_CLOSE OFF, RECOVERY SIMPLE

CREATE DATABASE [DB_2020]

ALTER DATABASE [DB_2020] SET AUTO_CLOSE OFF, RECOVERY SIMPLE

CREATE DATABASE [DB_2021]

ALTER DATABASE [DB_2021] SET AUTO_CLOSE OFF, RECOVERY SIMPLE

CREATE DATABASE [DB]

ALTER DATABASE [DB] SET AUTO_CLOSE OFF, RECOVERY SIMPLE

GO

USE [DB_2019]

GO

CREATE TABLE [T_2019] ([A] DATETIME, [B] INT, PRIMARY KEY ([A], [B]))

ALTER TABLE [T_2019] WITH CHECK ADD CONSTRAINT [T_CK]

CHECK ([A] >= '20190101' AND [A] < '20200101')

GO

INSERT INTO [T_2019] VALUES ('20190101', 1), ('20190201', 2), ('20190301', 3)

GO

USE [DB_2020]

GO

CREATE TABLE [T_2020] ([A] DATETIME, [B] INT, PRIMARY KEY ([A], [B]))

ALTER TABLE [T_2020] WITH CHECK ADD CONSTRAINT [T_CK]

CHECK ([A] >= '20200101' AND [A] < '20210101')

GO

INSERT INTO [T_2020] VALUES ('20200401', 4), ('20200501', 5), ('20200601', 6)

GO

USE [DB_2021]

GO

CREATE TABLE [T_2021] ([A] DATETIME, [B] INT, PRIMARY KEY ([A], [B]))

ALTER TABLE [T_2021] WITH CHECK ADD CONSTRAINT [T_CK]

CHECK ([A] >= '20210101' AND [A] < '20220101')

GO

INSERT INTO [T_2021] VALUES ('20210701', 7), ('20210801', 8), ('20210901', 9)

GO

USE [DB]

GO

CREATE SYNONYM [dbo].[T_2019] FOR [DB_2019].[dbo].[T_2019]

CREATE SYNONYM [dbo].[T_2020] FOR [DB_2020].[dbo].[T_2020]

CREATE SYNONYM [dbo].[T_2021] FOR [DB_2021].[dbo].[T_2021]

GO

CREATE VIEW [T]

AS

SELECT * FROM [dbo].[T_2019]

UNION ALL

SELECT * FROM [dbo].[T_2020]

UNION ALL

SELECT * FROM [dbo].[T_2021]

GO

当在设置了限制的列上进行过滤时,我们只会读取我们需要的数据。

SELECT COUNT(*) FROM [T] WHERE [A] > '20200101'

这可以在执行计划或统计信息中看到。

Table 'T_2021'. Scan count 1, logical reads 2, ...

Table 'T_2020'. Scan count 1, logical reads 2, ...

此外,由于限制,我们可以在视图内透明地修改数据。

INSERT INTO [T] VALUES ('20210101', 999)

UPDATE [T] SET [B] = 1 WHERE [A] = '20210101'

DELETE FROM [T] WHERE [A] = '20210101'

Table 'T_2021'. Scan count 0, logical reads 2, ...

Table 'T_2021'. Scan count 1, logical reads 6, ...

Table 'T_2020'. Scan count 0, logical reads 0, ...

Table 'T_2019'. Scan count 0, logical reads 0, ...

Table 'T_2021'. Scan count 1, logical reads 2, ...

Table 'T_2020'. Scan count 0, logical reads 0, ...

Table 'T_2019'. Scan count 0, logical reads 0, ...

采用这种方法,我们可以部分解决问题,但每个单独的数据库仍然会受到 10GB 的限制。

另一个选项是专门为架构怪癖爱好者发明的——由于数据文件的大小限制不适用于系统数据库(master, msdb, model and tempdb),因此所有的开发都可以放在这些数据库中进行。但大多数情况下,将系统数据库用作用户数据库的做法就像用火箭筒射击自己的脚。因此,我甚至不会详细说明这种决定的所有陷阱,但如果你仍然非常想这样做,这肯定会保证你迅速将粗俗词汇的水平提升到拥有 30 年经验的工头级别。

现在让我们继续解决这个问题的实际方案。

我们在开发者版上创建一个所需大小的数据库,然后将其分离。

USE [master]

GO

IF DB_ID('express') IS NOT NULL BEGIN

ALTER DATABASE [express] SET SINGLE_USER WITH ROLLBACK IMMEDIATE

DROP DATABASE [express]

END

GO

CREATE DATABASE [express]

GO

ALTER DATABASE [express] MODIFY FILE (NAME = N'express', SIZE = 20 GB)

ALTER DATABASE [express] MODIFY FILE (NAME = N'express_log', SIZE = 100 MB)

ALTER DATABASE [express] SET DISABLE_BROKER

GO

EXEC [master].dbo.sp_detach_db @dbname = N'express'

GO

在 Express 版上创建同名的数据库,然后停止服务。

USE [master]

GO

IF DB_ID('express') IS NOT NULL BEGIN

ALTER DATABASE [express] SET SINGLE_USER WITH ROLLBACK IMMEDIATE

DROP DATABASE [express]

END

GO

CREATE DATABASE [express]

GO

ALTER DATABASE [express] MODIFY FILE (NAME = N'express', SIZE = 100 MB)

ALTER DATABASE [express] MODIFY FILE (NAME = N'express_log', SIZE = 100 MB)

ALTER DATABASE [express] SET DISABLE_BROKER

GO

我们将数据库文件从开发者版移动到 Express 版中同名数据库的位置,替换部分文件。启动 SQL Server Express 版实例。

检查我们的数据库大小。

SET NOCOUNT ON

SET ARITHABORT ON

SET NUMERIC_ROUNDABORT OFF

SET STATISTICS IO, TIME OFF

IF OBJECT_ID('tempdb.dbo.#database_files') IS NOT NULL

DROP TABLE #database_files

CREATE TABLE #database_files (

[db_id] INT DEFAULT DB_ID()

, [name] SYSNAME

, [type] INT

, [size_mb] BIGINT

, [used_size_mb] BIGINT

)

DECLARE @sql NVARCHAR(MAX) = STUFF((

SELECT '

USE ' + QUOTENAME([name]) + '

INSERT INTO #database_files ([name], [type], [size_mb], [used_size_mb])

SELECT [name]

, [type]

, CAST([size] AS BIGINT) * 8 / 1024

, CAST(FILEPROPERTY([name], ''SpaceUsed'') AS BIGINT) * 8 / 1024

FROM sys.database_files WITH(NOLOCK);'

FROM sys.databases WITH(NOLOCK)

WHERE [state] = 0

AND ISNULL(HAS_DBACCESS([name]), 0) = 1

FOR XML PATH(''), TYPE).value('(./text())[1]', 'NVARCHAR(MAX)'), 1, 2, '')

EXEC sys.sp_executesql @sql

SELECT [db_id] = d.[database_id]

, [db_name] = d.[name]

, [state] = d.[state_desc]

, [total_mb] = s.[data_size] + s.[log_size]

, [data_mb] = s.[data_size]

, [data_used_mb] = s.[data_used_size]

, [data_free_mb] = s.[data_size] - s.[data_used_size]

, [log_mb] = s.[log_size]

, [log_used_mb] = s.[log_used_size]

, [log_free_mb] = s.[log_size] - s.[log_used_size]

FROM sys.databases d WITH(NOLOCK)

LEFT JOIN (

SELECT [db_id]

, [data_size] = SUM(CASE WHEN [type] = 0 THEN [size_mb] END)

, [data_used_size] = SUM(CASE WHEN [type] = 0 THEN [used_size_mb] END)

, [log_size] = SUM(CASE WHEN [type] = 1 THEN [size_mb] END)

, [log_used_size] = SUM(CASE WHEN [type] = 1 THEN [used_size_mb] END)

FROM #database_files

GROUP BY [db_id]

) s ON d.[database_id] = s.[db_id]

ORDER BY [total_mb] DESC

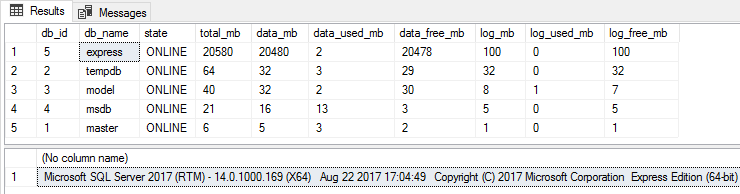

瞧!现在数据库文件的大小超出了限制,并且数据库功能齐全!

你仍然可以像以前一样对其进行收缩、创建备份或更改此数据库的设置。只有在需要从备份中恢复数据库或再次增加数据库文件大小时才会出现困难。在这种情况下,我们可以将备份恢复到开发者版,增加到所需大小,然后按照上述步骤替换文件。

结论

总而言之,SQL Server Express 版常常因为资源限制和其他各种借口而被不公正地忽视。本文的主要信息是,你可以在任何版本的 SQL Server 上设计一个高性能的系统。

感谢大家的关注!

历史

- 2021 年 3 月 13 日:初始版本