TrackEye: Real-Time Tracking of Human Eyes Using a Webcam


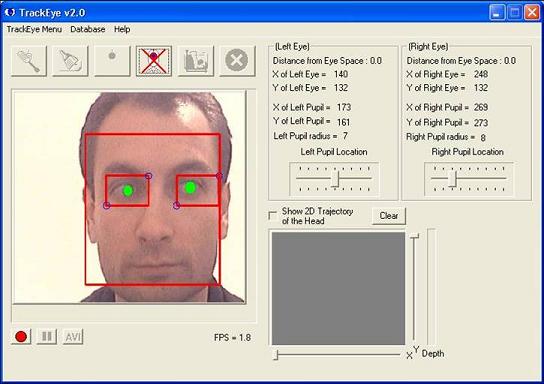

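Real-time tracking of human eyes in video sequences using a webcam.

Introduction

The eyes are the most important features of the human face. Effective use of eye movements as a communication technique in user-to-computer interfaces can therefore find a place in many application areas.

Eye tracking, and the information provided by eye features, has the potential to become an interesting way of communicating with a computer in human-computer interaction (HCI) systems. With this motivation, the objective of this project is to design real-time eye feature tracking software.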

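The project aims to implement a real-time eye feature tracker with the following capabilities:

- Real-time face tracking with scale and rotation invariance

- Tracking of each eye area individually

- Tracking of eye features

- Detection of the gaze direction

- Remote control using eye movements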

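Instructions for Running and Rebuilding TrackEye

Installation Instructions

- Extract TrackEye_Executable.zip. Before running TrackEye_636.exe, copy the two files SampleHUE.jpg and SampleEye.jpg to the C:\ folder. These files are used by the CAMSHIFT and template matching algorithms, respectively.

- No other steps are needed to run the software. There are no DLL dependencies, because the libraries were statically linked when the software was built.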

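Settings Required for Good Tracking

Face and Eye Detection Settings

Under TrackEye Menu --> Tracker Settings:

- Input Source: video

- Click Select file and choose ..\Avis\Sample.avi

- Face Detection Algorithm: Haar Face Detection Algorithm

- Check the "Track also Eyes" checkbox

- Eye Detection Algorithm: Adaptive PCA

- Uncheck "Variance Check"

- Number of images in the database: 8

- Number of EigenEyes: 5

- Maximum allowed distance from eye space: 1200

- Face width/eye template width ratio: 0.3 (in the code, the eye template width is computed as face width × this ratio)

- ColorSpace type used during PCA: CV_RGB2GRAY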

Pupil Detection Settings

Check "Track eyes in details", then check "Detect also eye pupils". Click the "Adjust Parameters" button:

- Enter "120" in "Threshold Value"

- Click "Save Settings", then click "Close"

Snake Settings

Check "Indicate eye boundary using active snakes". Click the "Settings for snake" button:

- Select the ColorSpace to use: CV_RGB2GRAY

- Select Simple thresholding and enter 100 as the "Threshold value"

- Click "Save Settings", then click "Close"

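Background

A great deal of work has already been done on eye detection. Before starting the project, we studied the previous approaches carefully to decide which method to implement. Eye-related research can be divided into the following two categories:

Equipment-Based Approaches

These approaches rely on dedicated equipment that produces a signal proportional to the position of the eye in its orbit. Methods in current use include electrooculography, infrared oculography, and scleral search coils. These methods are entirely outside the scope of our project.

Image-Based Approaches

Image-based approaches perform eye detection on images. Most of them try to detect the eyes by exploiting the eyes' characteristics. Methods used so far include knowledge-based methods, feature-based methods (color, gradient), simple template matching, and appearance-based methods. Another interesting approach is "deformable template matching". It matches a geometric eye template to the image by minimizing the energy of the geometric model.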

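Implementation of TrackEye

The implemented project has three components:

- Face detection: performs scale-invariant face detection

- Eye detection: detects both eyes

- Eye feature extraction: extracts the eye features

Face Detection

Two different methods were implemented in the project:

- Continuously Adaptive Mean Shift (CAMSHIFT) algorithm

- Haar face detection method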

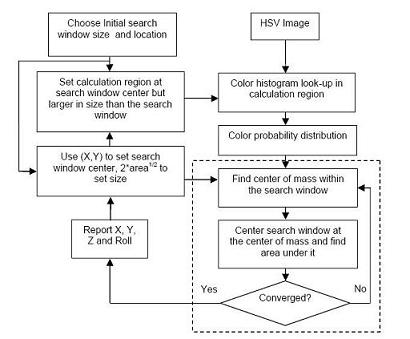

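Continuously Adaptive Mean Shift Algorithm

The adaptive mean shift algorithm is used to track the face. It builds on the mean shift algorithm, a robust non-parametric technique that finds the mode (peak) of a probability distribution by climbing density gradients. In TrackEye, this distribution is obtained by back-projecting a hue histogram of a skin sample onto each frame. Because the face is tracked through a video sequence, the mean shift algorithm is modified to handle color probability distributions that change dynamically. The block diagram of the algorithm is shown in the figure.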
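A minimal, dependency-free sketch of one CAMSHIFT step may make the idea concrete. Mean shift repeatedly moves a fixed-size window to the centroid of the probability mass inside it until the window stops moving. The window is then resized from the zeroth moment M00. This is a simplified illustration, not OpenCV's cvCamShift: it ignores orientation, treats the back-projected probabilities as doubles in [0, 1], and uses a square window of side 2·sqrt(M00) as an assumed sizing heuristic. Window, ProbImage and camShiftStep are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical window type for this sketch (not OpenCV's CvRect).
struct Window { int x, y, w, h; };

// Back-projected probability image, row-major, values in [0, 1].
struct ProbImage {
    int width, height;
    std::vector<double> p;
    double at(int x, int y) const { return p[y * width + x]; }
};

// Simplified CAMSHIFT step: mean shift with a fixed window until the centroid
// stops moving, then resize the window from the zeroth moment M00.
Window camShiftStep(const ProbImage& img, Window win, int maxIter = 15) {
    assert(win.w > 0 && win.h > 0);
    double m00 = 0.0, cx = 0.0, cy = 0.0;
    for (int iter = 0; iter < maxIter; ++iter) {
        double m10 = 0.0, m01 = 0.0;
        m00 = 0.0;
        // Zeroth and first moments of the probability inside the window.
        for (int y = win.y; y < win.y + win.h; ++y) {
            for (int x = win.x; x < win.x + win.w; ++x) {
                if (x < 0 || y < 0 || x >= img.width || y >= img.height) continue;
                double v = img.at(x, y);
                m00 += v;
                m10 += v * x;
                m01 += v * y;
            }
        }
        if (m00 <= 0.0) return win;  // nothing to track: keep the old window
        cx = m10 / m00;
        cy = m01 / m00;
        // Re-center the window on the centroid (one mean shift iteration).
        int nx = (int)std::lround(cx - win.w / 2.0);
        int ny = (int)std::lround(cy - win.h / 2.0);
        bool moved = (nx != win.x || ny != win.y);
        win.x = nx;
        win.y = ny;
        if (!moved) break;  // converged on a mode
    }
    // Assumed sizing heuristic: side = 2 * sqrt(M00) for probabilities in [0, 1].
    int side = (int)std::lround(2.0 * std::sqrt(m00));
    return Window{(int)std::lround(cx - side / 2.0), (int)std::lround(cy - side / 2.0),
                  side, side};
}
```

Starting from a window that only partly covers a uniform 10×10 blob, the step re-centers on the blob and returns a 20×20 window around its centroid. In a tracker, the returned window seeds the search in the next frame, just as `searchWin = comp.rect` does in the CamShift sample code.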

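Haar Face Detection Method

The second face detection algorithm is based on a classifier that uses Haar-like features, namely a cascade of boosted classifiers working with Haar-like features. First, the classifier is trained with a few hundred sample views of faces (positive examples). Once trained, it can be applied to a region of interest in an input image. The classifier outputs "1" if the region is likely to show a face and "0" otherwise. To search for faces in the whole image, the search window is moved across the image and each location is checked with the classifier. The classifier is designed so that it can easily be "resized" to find objects of interest at different sizes. This is more efficient than resizing the image itself.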
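One reason resizing the classifier is cheap is that Haar-like features are differences of rectangle sums. With an integral image, any rectangle sum costs four lookups regardless of the rectangle's size. The sketch below illustrates this with a single two-rectangle feature. It is a stand-alone example, not OpenCV's cascade implementation, and IntegralImage and twoRectVertical are hypothetical names.

```cpp
#include <cassert>
#include <vector>

// Integral image with an extra zero row/column, so that the sum of the source
// over [x0, x1) x [y0, y1) is S(x1,y1) - S(x0,y1) - S(x1,y0) + S(x0,y0).
struct IntegralImage {
    int width, height;          // size of the source image
    std::vector<long long> s;   // (width + 1) * (height + 1) entries

    IntegralImage(const std::vector<int>& img, int w, int h)
        : width(w), height(h), s((w + 1) * (h + 1), 0) {
        assert((int)img.size() == w * h);
        for (int y = 0; y < h; ++y) {
            long long rowSum = 0;  // running sum of row y up to column x
            for (int x = 0; x < w; ++x) {
                rowSum += img[y * w + x];
                s[(y + 1) * (w + 1) + (x + 1)] = s[y * (w + 1) + (x + 1)] + rowSum;
            }
        }
    }

    long long at(int x, int y) const { return s[y * (width + 1) + x]; }

    // Sum of the rw x rh rectangle whose top-left corner is (x, y): four lookups.
    long long rectSum(int x, int y, int rw, int rh) const {
        return at(x + rw, y + rh) - at(x, y + rh) - at(x + rw, y) + at(x, y);
    }
};

// Two-rectangle (edge) feature: sum of the upper half minus sum of the lower half.
// A bright region above a dark one gives a large positive response.
long long twoRectVertical(const IntegralImage& ii, int x, int y, int w, int h) {
    assert(h % 2 == 0);
    return ii.rectSum(x, y, w, h / 2) - ii.rectSum(x, y + h / 2, w, h / 2);
}
```

Evaluating the same feature at twice the size only changes the rectangle coordinates passed to rectSum. The cost per evaluation stays constant.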

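Eye Detection

Two different methods were implemented in the project:

- Template matching

- Adaptive EigenEye method

Template Matching

Template matching is a well-known method for object detection. In our template matching method, a standard eye pattern is created manually. Given an input image, the correlation values between the eye regions and the standard pattern are computed, and the presence of an eye is determined from these values. This approach has the advantage of being easy to implement. However, it is sometimes inadequate for eye detection, because it cannot effectively handle variations in scale, pose, and shape.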
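The correlation idea can be sketched without OpenCV. The example below slides a template over a search area and scores each position with zero-mean normalized cross-correlation, which is similar in spirit to OpenCV's CV_TM_CCOEFF_NORMED. TrackEye lets the user choose the matching method through `settings->params->tempMatch`, so this is only one possible choice. Gray, Match, nccAt and bestMatch are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical row-major grayscale image.
struct Gray {
    int w, h;
    std::vector<double> px;
    double at(int x, int y) const { return px[y * w + x]; }
};

struct Match { int x, y; double score; };

// Zero-mean normalized cross-correlation between tpl and the img patch whose
// top-left corner is (ox, oy). Roughly in [-1, 1]; 0 is returned for flat patches.
double nccAt(const Gray& img, const Gray& tpl, int ox, int oy) {
    const double n = (double)tpl.w * tpl.h;
    double meanI = 0.0, meanT = 0.0;
    for (int y = 0; y < tpl.h; ++y)
        for (int x = 0; x < tpl.w; ++x) {
            meanI += img.at(ox + x, oy + y);
            meanT += tpl.at(x, y);
        }
    meanI /= n;
    meanT /= n;
    double num = 0.0, varI = 0.0, varT = 0.0;
    for (int y = 0; y < tpl.h; ++y)
        for (int x = 0; x < tpl.w; ++x) {
            double a = img.at(ox + x, oy + y) - meanI;
            double b = tpl.at(x, y) - meanT;
            num += a * b;
            varI += a * a;
            varT += b * b;
        }
    double den = std::sqrt(varI * varT);
    return den > 0.0 ? num / den : 0.0;
}

// Try every position where the template fits completely, i.e. a
// (img.w - tpl.w + 1) x (img.h - tpl.h + 1) grid, and keep the highest score.
Match bestMatch(const Gray& img, const Gray& tpl) {
    assert(tpl.w <= img.w && tpl.h <= img.h);
    Match best{0, 0, -2.0};  // below any possible correlation value
    for (int oy = 0; oy + tpl.h <= img.h; ++oy)
        for (int ox = 0; ox + tpl.w <= img.w; ++ox) {
            double s = nccAt(img, tpl, ox, oy);
            if (s > best.score) best = Match{ox, oy, s};
        }
    return best;
}
```

Because the score is normalized, a uniform brightness offset or contrast gain on the search area does not change which position wins. It does not address the scale, pose, and shape limitations described above.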

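Adaptive EigenEye Method

The adaptive EigenEye method is based on the well-known EigenFaces method. Because the method is used for eye detection, we call it the "EigenEye method". The main idea is to decompose eye images into a small set of characteristic feature images called eigeneyes, which can be regarded as the principal components of the original images. These eigeneyes serve as the orthogonal basis vectors of a subspace called eyespace. A candidate window is classified as an eye when its distance from eyespace is small, that is, when it can be reconstructed accurately from the eigeneyes. However, the eigenface method is known not to be scale invariant. To provide scale invariance, the eye database is resized once using information gathered by the face detection algorithm (EyeWidth / FaceWidth ≈ 0.35). This makes scale-invariant detection possible with a single database.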
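The following small, dependency-free C++ sketch shows the distance-from-eyespace test that the adaptive EigenEye search relies on. It is not TrackEye's code, which uses OpenCV's cvCalcEigenObjects, cvEigenDecomposite and cvEigenProjection. The sketch assumes flattened patches stored as `std::vector<double>` and finds the eigeneyes by power iteration on the covariance matrix. That is only practical for tiny patches; EigenFaces-style implementations usually work with the smaller matrix of inner products between training images instead. EyeSpace, buildEyeSpace and distanceFromEyeSpace are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

using Vec = std::vector<double>;  // a flattened eye patch

double dot(const Vec& a, const Vec& b) {
    assert(a.size() == b.size());
    double s = 0.0;
    for (size_t i = 0; i < a.size(); ++i) s += a[i] * b[i];
    return s;
}

// Hypothetical eye space: mean patch plus orthonormal eigeneyes.
struct EyeSpace {
    Vec mean;
    std::vector<Vec> eigeneyes;
};

// Mean + top-k eigenvectors of the covariance matrix, found by power iteration.
// Each iterate is re-orthogonalized against the eigeneyes already found.
EyeSpace buildEyeSpace(const std::vector<Vec>& samples, int k, int iters = 100) {
    assert(!samples.empty() && k > 0);
    const size_t d = samples[0].size();
    const double n = (double)samples.size();
    EyeSpace es;
    es.mean.assign(d, 0.0);
    for (const Vec& s : samples)
        for (size_t i = 0; i < d; ++i) es.mean[i] += s[i] / n;

    // Covariance C = (1/n) * sum over samples of (x - mean)(x - mean)^T.
    std::vector<Vec> C(d, Vec(d, 0.0));
    for (const Vec& s : samples)
        for (size_t i = 0; i < d; ++i)
            for (size_t j = 0; j < d; ++j)
                C[i][j] += (s[i] - es.mean[i]) * (s[j] - es.mean[j]) / n;

    for (int c = 0; c < k; ++c) {
        Vec v(d);
        for (size_t i = 0; i < d; ++i) v[i] = 1.0 / (i + 1.0);  // arbitrary start vector
        for (int it = 0; it < iters; ++it) {
            Vec w(d, 0.0);
            for (size_t i = 0; i < d; ++i)
                for (size_t j = 0; j < d; ++j) w[i] += C[i][j] * v[j];
            for (const Vec& e : es.eigeneyes) {  // remove components already found
                double p = dot(w, e);
                for (size_t i = 0; i < d; ++i) w[i] -= p * e[i];
            }
            double norm = std::sqrt(dot(w, w));
            if (norm < 1e-9) return es;  // no variance left in the data
            for (size_t i = 0; i < d; ++i) v[i] = w[i] / norm;
        }
        es.eigeneyes.push_back(v);
    }
    return es;
}

// Distance from eye space: length of the part of (x - mean) that the eigeneyes
// cannot reconstruct. Small values mean the patch resembles the training eyes.
double distanceFromEyeSpace(const EyeSpace& es, const Vec& x) {
    Vec r(x.size());
    for (size_t i = 0; i < x.size(); ++i) r[i] = x[i] - es.mean[i];
    for (const Vec& e : es.eigeneyes) {
        double w = dot(r, e);  // projection weight on this eigeneye
        for (size_t i = 0; i < r.size(); ++i) r[i] -= w * e[i];
    }
    return std::sqrt(dot(r, r));
}
```

A search loop would call distanceFromEyeSpace for every candidate window and keep the window with the smallest distance below a threshold. This corresponds to the "maximum allowed distance from eye space" setting.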

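OpenCV Functions for Object Tracking and Detection

The OpenCV library provides a large number of functions for image processing and for object tracking and detection. The main functions used in the project, and how they are used, are shown below:

Sample Code for Haar Face Tracking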

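```cpp
// Detect the first face in img with the Haar cascade and store its box in faceBox.
// storage, hid_cascade and NumOfHaarFaces are members of CTrackEyeDlg.
void CTrackEyeDlg::HaarFaceDetect( IplImage* img, CvBox2D* faceBox )
{
    int scale = 2;
    // Run the detector on a half-size copy of the frame to save time
    IplImage* temp = cvCreateImage( cvSize(img->width/2, img->height/2), 8, 3 );
    CvPoint pt1, pt2;

    cvPyrDown( img, temp, CV_GAUSSIAN_5x5 );
#ifdef WIN32
    // On WIN32 the frame buffer is stored bottom-up; flip so the cascade sees an upright face
    cvFlip( temp, temp, 0 );
#endif
    cvClearMemStorage( storage );

    if( hid_cascade )
    {
        CvSeq* faces = cvHaarDetectObjects( temp, hid_cascade, storage, 1.2, 2,
                                            CV_HAAR_DO_CANNY_PRUNING );
        NumOfHaarFaces = faces->total;

        if (NumOfHaarFaces > 0)
        {
            // Use only the first detected face
            CvRect* r = (CvRect*)cvGetSeqElem( faces, 0, 0 );
            // Scale the detection back to full-frame coordinates
            pt1.x = r->x*scale;
            pt2.x = (r->x+r->width)*scale;
#ifdef WIN32
            // Undo the vertical flip
            pt1.y = img->height - r->y*scale;
            pt2.y = img->height - (r->y+r->height)*scale;
#else
            pt1.y = r->y*scale;
            pt2.y = (r->y+r->height)*scale;
#endif
            faceBox->center.x = (float)(pt1.x+pt2.x)/2.0f;
            faceBox->center.y = (float)(pt1.y+pt2.y)/2.0f;
            // Take the size from the rectangle itself so it is positive in both branches
            faceBox->size.width  = (float)(r->width*scale);
            faceBox->size.height = (float)(r->height*scale);
        }
    }

    cvShowImage( "Tracking", img );
    cvReleaseImage( &temp );
}
```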
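The coordinate bookkeeping at the end of HaarFaceDetect can be checked in isolation. This hypothetical helper maps a detection from the half-size image back to the full frame, and flips y when the detector ran on a vertically flipped copy, as in the WIN32 branch. Rect, Box and toFullImage are illustrative names, not OpenCV types.

```cpp
#include <cassert>

// Hypothetical stand-ins for CvRect and the center/size part of CvBox2D.
struct Rect { int x, y, width, height; };
struct Box  { float cx, cy, w, h; };

// Map a detection found in the downscaled (and possibly flipped) image back to
// the full frame, following the arithmetic used in HaarFaceDetect.
Box toFullImage(const Rect& r, int scale, int fullHeight, bool flipped) {
    assert(scale > 0);
    int x1 = r.x * scale, x2 = (r.x + r.width) * scale;
    int y1 = r.y * scale, y2 = (r.y + r.height) * scale;
    if (flipped) {  // undo the vertical flip (WIN32 branch)
        y1 = fullHeight - y1;
        y2 = fullHeight - y2;
    }
    // The size comes straight from the rectangle, so it is positive in both branches.
    return Box{(x1 + x2) / 2.0f, (y1 + y2) / 2.0f,
               (float)(r.width * scale), (float)(r.height * scale)};
}
```

For a 640×480 frame, a detection at (10, 20) of size 30×40 in the half-size flipped image maps to a 60×80 box centered at (50, 400). Without the flip, the same detection is centered at (50, 80).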

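Sample Code for the CamShift Algorithm

```cpp
// Inputs for the CamShift algorithm.
// SampleForHUE, CameraFrame, hist, hueFrame, backProject, searchWin, comp,
// faceBox and trackControl are declared elsewhere in TrackEye.
IplImage* HUE = cvCreateImage(cvGetSize(SampleForHUE), IPL_DEPTH_8U, 1);
extractHUE(SampleForHUE, HUE);   // ** Extract HUE information from the skin sample

int hist_size = 20;              // number of hue bins
float ranges[] = { 0, 180 };     // hue range used for 8-bit images
float* pranges[] = { ranges };
hist = cvCreateHist( 1, &hist_size, CV_HIST_ARRAY, pranges, 1 );
cvCalcHist( &HUE, hist );        // Calculate the histogram of the HUE plane
cvReleaseImage( &HUE );          // The sample is no longer needed

hueFrame    = cvCreateImage(cvGetSize(CameraFrame), IPL_DEPTH_8U, 1);
backProject = cvCreateImage(cvGetSize(CameraFrame), IPL_DEPTH_8U, 1);
extractHUE(CameraFrame, hueFrame);

// CameraFrame is assumed to be refreshed with the next video frame on each pass
while (trackControl != 0)
{
    extractHUE( CameraFrame, hueFrame );
    cvCalcBackProject( &hueFrame, backProject, hist );   // Per-pixel skin probability
    //cvShowImage("Tester2", backProject);
    cvCamShift( backProject, searchWin,
                cvTermCriteria( CV_TERMCRIT_EPS | CV_TERMCRIT_ITER, 15, 0.1 ),
                &comp, &faceBox );
    searchWin = comp.rect;   // Start the next search from the new window
}
```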
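Plain arrays are enough to illustrate what cvCalcHist and cvCalcBackProject compute here. The first step builds a 20-bin histogram of 8-bit hue values over [0, 180). The second replaces every pixel with the value of its bin. The sketch also scales the histogram so that its largest bin becomes 255. That scaling is an assumption (a common step before back projection) and does not appear in the snippet above. hueHistogram and backProject are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Histogram of 8-bit hue values (range [0, 180)) with `bins` equal-width bins,
// scaled so that the fullest bin becomes 255.
std::vector<double> hueHistogram(const std::vector<uint8_t>& hue, int bins) {
    assert(bins > 0);
    std::vector<double> h(bins, 0.0);
    for (uint8_t v : hue)
        if (v < 180) h[v * bins / 180] += 1.0;
    double mx = *std::max_element(h.begin(), h.end());
    if (mx > 0.0)
        for (double& b : h) b = b * 255.0 / mx;
    return h;
}

// Back projection: each pixel takes the (scaled) histogram value of its hue bin,
// giving a per-pixel "skin color" likelihood image for CamShift.
std::vector<uint8_t> backProject(const std::vector<uint8_t>& hue,
                                 const std::vector<double>& hist) {
    const int bins = (int)hist.size();
    std::vector<uint8_t> out(hue.size(), 0);
    for (size_t i = 0; i < hue.size(); ++i)
        if (hue[i] < 180) out[i] = (uint8_t)(hist[hue[i] * bins / 180] + 0.5);
    return out;
}
```

With 20 bins each bin spans 9 hue values, so hues 0 to 8 fall into bin 0, 9 to 17 into bin 1, and so on. Pixels whose hue is common in the skin sample light up in the back projection.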

Sample Code for Template Matching

```cpp
// Template Matching for eye detection
void Face::findEyes_TM(IplImage* faceImage, TrackingSettings* settings)
{
    CvSize faceSize = cvGetSize(faceImage);

    // Load the templates from the eye database
    CString fileName;
    // Name of the template for the left eye
    fileName.Format("%s\\eye%d.jpg", settings->params->DBdirectory, 0);
    IplImage* eyeImage_Left = cvLoadImage(fileName, -1);
    // Name of the template for the right eye
    fileName.Format("%s\\eye%d.jpg", settings->params->DBdirectory, 1);
    IplImage* eyeImage_Right = cvLoadImage(fileName, -1);

    IplImage* tempTemplateImg_Left;  IplImage* tempTemplateImg_Right;
    IplImage* templateImg_Left;      IplImage* templateImg_Right;

    if (eyeImage_Left == NULL || eyeImage_Right == NULL)
    {
        MessageBox(NULL, "Templates can not be loaded.\n"
                         "Please check your eye database folder",
                   "Error", MB_OK | MB_ICONSTOP);   // flags are combined with bitwise OR
        exit(1);
    }
    else
    {
        // Change color space according to the settings entered by the user
        tempTemplateImg_Left = cvCreateImage(cvGetSize(eyeImage_Left), IPL_DEPTH_8U, 1);
        changeColorSpace(settings, eyeImage_Left, tempTemplateImg_Left);
        tempTemplateImg_Right = cvCreateImage(cvGetSize(eyeImage_Right), IPL_DEPTH_8U, 1);
        changeColorSpace(settings, eyeImage_Right, tempTemplateImg_Right);

        // Scale both templates so that template width = face width * ratio
        float idealWidth = faceSize.width * settings->params->ratio;
        float conversionRatio = idealWidth/(float)tempTemplateImg_Left->width;
        CvSize newSize;
        newSize.width  = (int)idealWidth;
        newSize.height = (int)(tempTemplateImg_Left->height*conversionRatio);

        templateImg_Left = cvCreateImage(newSize, IPL_DEPTH_8U, 1);
        cvResize(tempTemplateImg_Left, templateImg_Left, CV_INTER_LINEAR);   // was NN
        cvReleaseImage(&eyeImage_Left);
        cvReleaseImage(&tempTemplateImg_Left);

        templateImg_Right = cvCreateImage(newSize, IPL_DEPTH_8U, 1);
        cvResize(tempTemplateImg_Right, templateImg_Right, CV_INTER_LINEAR); // was NN
        cvReleaseImage(&eyeImage_Right);
        cvReleaseImage(&tempTemplateImg_Right);
    }

    // *************************************************************
    // ************Search faceImage for eyes************************
    // *************************************************************
    IplImage* GRAYfaceImage = cvCreateImage(faceSize, IPL_DEPTH_8U, 1);
    changeColorSpace(settings, faceImage, GRAYfaceImage);
    //cvCvtColor( faceImage, GRAYfaceImage, CV_RGB2GRAY);
    //GRAYfaceImage->origin = 1;
    // ** Warning: at this point the image origin is the bottom-left corner.

    // cvGetSubRect only fills in a header that points into GRAYfaceImage,
    // so stack CvMat headers are sufficient (no separate data buffer is needed).
    CvMat eye1Header, eye2Header;

    // ** Eye1 search area: left half of the face
    int x_left      = 0;
    int y_left      = 0;
    int width_left  = faceSize.width/2;
    int height_left = faceSize.height;
    CvRect rect_Eye1 = cvRect(x_left, y_left, width_left, height_left);
    cvGetSubRect(GRAYfaceImage, &eye1Header, rect_Eye1);
    CvMat* Eye1Image = &eye1Header;
    cvFlip( Eye1Image, Eye1Image, 0 );

    // ** Eye2 search area: right half of the face
    int x_right      = faceSize.width/2;
    int y_right      = 0;
    int width_right  = faceSize.width/2;
    int height_right = faceSize.height;
    CvRect rect_Eye2 = cvRect(x_right, y_right, width_right, height_right);
    cvGetSubRect(GRAYfaceImage, &eye2Header, rect_Eye2);
    CvMat* Eye2Image = &eye2Header;
    cvFlip( Eye2Image, Eye2Image, 0 );

    // OpenCV requires the result to be (W - w + 1) x (H - h + 1):
    CvSize size;
    size.height = Eye1Image->height - templateImg_Left->height + 1;
    size.width  = Eye1Image->width  - templateImg_Left->width  + 1;
    IplImage* result1 = cvCreateImage( size, IPL_DEPTH_32F, 1 );
    IplImage* result2 = cvCreateImage( size, IPL_DEPTH_32F, 1 );

    // Left eye
    cvMatchTemplate( Eye1Image, templateImg_Left,  result1, settings->params->tempMatch );
    // Right eye
    cvMatchTemplate( Eye2Image, templateImg_Right, result2, settings->params->tempMatch );

    // The best match is taken as the maximum, which assumes a correlation method
    // (CV_TM_CCORR* / CV_TM_CCOEFF*); for CV_TM_SQDIFF* the minimum is the best match.
    // find the best match location - LEFT EYE
    double minValue1, maxValue1;
    CvPoint minLoc1, maxLoc1;
    cvMinMaxLoc( result1, &minValue1, &maxValue1, &minLoc1, &maxLoc1 );
    cvCircle( result1, maxLoc1, 5, settings->programColors.colors[2], 1 );
    // transform the point to the center of the matched template
    maxLoc1.x += templateImg_Left->width  / 2;
    maxLoc1.y += templateImg_Left->height / 2;
    settings->params->eye1.coords.x = maxLoc1.x;
    settings->params->eye1.coords.y = maxLoc1.y;
    settings->params->eye1.RectSize.width  = templateImg_Left->width;
    settings->params->eye1.RectSize.height = templateImg_Left->height;
    settings->params->eye1.eyefound = true;

    // find the best match location - RIGHT EYE
    double minValue2, maxValue2;
    CvPoint minLoc2, maxLoc2;
    cvMinMaxLoc( result2, &minValue2, &maxValue2, &minLoc2, &maxLoc2 );
    cvCircle( result2, maxLoc2, 5, settings->programColors.colors[2], 1 );
    // transform the point to the center of the matched template
    maxLoc2.x += templateImg_Right->width  / 2;
    maxLoc2.y += templateImg_Right->height / 2;
    // eye2 was searched in the right half, so shift x by half the face width
    settings->params->eye2.coords.x = maxLoc2.x + faceSize.width/2;
    settings->params->eye2.coords.y = maxLoc2.y;
    settings->params->eye2.RectSize.width  = templateImg_Right->width;
    settings->params->eye2.RectSize.height = templateImg_Right->height;
    settings->params->eye2.eyefound = true;

    cvCircle( Eye1Image, maxLoc1, 5, settings->programColors.colors[2], 1 );
    cvCircle( Eye2Image, maxLoc2, 5, settings->programColors.colors[2], 1 );

    // ** Cleanup
    cvReleaseImage(&result1);
    cvReleaseImage(&result2);
    cvReleaseImage(&templateImg_Left);
    cvReleaseImage(&templateImg_Right);
    cvReleaseImage(&GRAYfaceImage);
}
```
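The size arithmetic in findEyes_TM can be checked on its own. This sketch mirrors it with a hypothetical Size2 type. The template is scaled to faceWidth × ratio while keeping its aspect ratio. Each eye is searched in one half of the face. The match result has (W - w + 1) × (H - h + 1) entries, as the code comment notes. scaledTemplateSize, halfFaceSearchArea and matchResultSize are illustrative names.

```cpp
#include <cassert>

// Hypothetical stand-in for CvSize.
struct Size2 { int width, height; };

// Template size as computed in findEyes_TM: width = faceWidth * ratio,
// height scaled by the same factor so the template keeps its aspect ratio.
Size2 scaledTemplateSize(Size2 face, Size2 tpl, float ratio) {
    assert(tpl.width > 0 && ratio > 0.0f);
    float idealWidth = face.width * ratio;
    float conversionRatio = idealWidth / (float)tpl.width;
    return Size2{(int)idealWidth, (int)(tpl.height * conversionRatio)};
}

// Each eye is searched in one half of the face: x in [0, W/2) for eye1 and
// [W/2, 2*(W/2)) for eye2 (when W is odd, the last column is not searched).
Size2 halfFaceSearchArea(Size2 face) {
    return Size2{face.width / 2, face.height};
}

// Size of the cvMatchTemplate result for a W x H area and a w x h template.
Size2 matchResultSize(Size2 area, Size2 tpl) {
    assert(tpl.width <= area.width && tpl.height <= area.height);
    return Size2{area.width - tpl.width + 1, area.height - tpl.height + 1};
}
```

For a 200×240 face, a 40×20 template and a ratio of 0.25, the template becomes 50×25. Each half-face search area is 100×240, so each result image is 51×216.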

Sample Code for the Adaptive EigenEye Method

```cpp
void Face::findEyes(IplImage* faceImage, TrackingSettings* settings)
{
    // numOfImages, database, vals and weights are members of Face.
    // Assumes settings->params->nImages <= numOfImages.
    IplImage** images = (IplImage**)malloc(sizeof(IplImage*)*numOfImages);
    IplImage** eigens = (IplImage**)malloc(sizeof(IplImage*)*numOfImages);
    IplImage* averageImage;
    IplImage* projection;
    CvSize faceSize = cvGetSize(faceImage);
    int i;

    // Resize the eye database so that eye width = face width * ratio (scale invariance)
    eigenSize newEigenSize;
    newEigenSize.width = faceSize.width * settings->params->ratio;
    newEigenSize.conversion = ((float)newEigenSize.width) / ((float)database[0]->width);
    newEigenSize.height = ((float)database[0]->height) * newEigenSize.conversion;
    CvSize newSize;
    newSize.width  = (int)newEigenSize.width;
    newSize.height = (int)newEigenSize.height;

    // **********Initializations**********
    for (i = 0; i < settings->params->nImages; i++)
    {
        images[i] = cvCreateImage(newSize, IPL_DEPTH_8U, 1);
        cvResize(database[i], images[i], CV_INTER_LINEAR);   // was NN
    }
    cvShowImage("Eigen", images[0]);

    // Create space for the EigenEyes
    for (i = 0; i < settings->params->nImages; i++)
        eigens[i] = cvCreateImage(cvGetSize(images[0]), IPL_DEPTH_32F, 1);
    averageImage = cvCreateImage(cvGetSize(images[0]), IPL_DEPTH_32F, 1);
    projection   = cvCreateImage(cvGetSize(images[0]), IPL_DEPTH_8U, 1);

    // *************************************************************
    // ************Calculate EigenVectors & EigenValues*************
    // *************************************************************
    CvTermCriteria criteria;
    criteria.type = CV_TERMCRIT_ITER|CV_TERMCRIT_EPS;
    criteria.maxIter = 13;
    criteria.epsilon = 0.1;
    // ** n was present instead of numOfImages
    cvCalcEigenObjects( settings->params->nImages, images, eigens,
                        0, 0, 0, &criteria, averageImage, vals );

    // *************************************************************
    // ************Search faceImage for eyes************************
    // *************************************************************
    IplImage* GRAYfaceImage = cvCreateImage(faceSize, IPL_DEPTH_8U, 1);
    changeColorSpace(settings, faceImage, GRAYfaceImage);
    //cvCvtColor( faceImage, GRAYfaceImage, CV_RGB2GRAY);
    // ** Warning: at this point the image origin is the bottom-left corner.
    GRAYfaceImage->origin = 1;

    // A window is accepted only if its distance from eye space is below MaxError
    int MARGIN = settings->params->MaxError;
    double minimum = MARGIN; double distance = MARGIN;

    // ** Eye1 search space: left half, vertical band from 50% to 75% of the face height
    settings->params->eye1.xlimitLeft  = 0;
    settings->params->eye1.xlimitRight = faceSize.width/2.0 - images[0]->width - 1;
    settings->params->eye1.ylimitUp    =
        (int)( ((float)faceSize.height)*0.75 - images[0]->height - 1);
    settings->params->eye1.ylimitDown  = faceSize.height/2;
    // ** Eye2 search space: right half, same vertical band
    settings->params->eye2.xlimitLeft  = faceSize.width/2.0;
    settings->params->eye2.xlimitRight = faceSize.width - images[0]->width - 1;
    settings->params->eye2.ylimitUp    =
        (int)( ((float)faceSize.height)*0.75 - images[0]->height - 1);
    settings->params->eye2.ylimitDown  = faceSize.height/2;

    settings->params->eye1.initializeEyeParameters();
    settings->params->eye2.initializeEyeParameters();
    settings->params->eye1.RectSize.width  = images[0]->width;
    settings->params->eye1.RectSize.height = images[0]->height;
    settings->params->eye2.RectSize.width  = images[0]->width;
    settings->params->eye2.RectSize.height = images[0]->height;

    IplImage* Image2Comp = cvCreateImage(cvGetSize(images[0]), IPL_DEPTH_8U, 1);
    int x, y;

    // ** Search left eye, i.e. eye1 (2-pixel steps in both directions)
    for (y = settings->params->eye1.ylimitDown; y < settings->params->eye1.ylimitUp; y += 2)
    {
        for (x = settings->params->eye1.xlimitLeft; x < settings->params->eye1.xlimitRight; x += 2)
        {
            cvSetImageROI(GRAYfaceImage, cvRect(x, y, images[0]->width, images[0]->height));
            // Optionally skip flat windows whose standard deviation is too low
            if (settings->params->varianceCheck == 1)
            {
                if (calculateSTD(GRAYfaceImage) <= (double)(settings->params->variance))
                {
                    cvResetImageROI(GRAYfaceImage);
                    continue;
                }
            }
            cvFlip( GRAYfaceImage, Image2Comp, 0 );
            cvResetImageROI(GRAYfaceImage);

            // Decide whether it is an eye or not: project onto eye space,
            // reconstruct, and measure the reconstruction error
            cvEigenDecomposite( Image2Comp, settings->params->nEigens,
                                eigens, 0, 0, averageImage, weights );
            cvEigenProjection( eigens, settings->params->nEigens,
                               CV_EIGOBJ_NO_CALLBACK, 0, weights, averageImage, projection );
            distance = cvNorm(Image2Comp, projection, CV_L2, 0);

            if (distance < minimum && distance > 0)
            {
                settings->params->eye1.eyefound = true;
                minimum = distance;
                settings->params->eye1.distance = distance;
                settings->params->eye1.coords.x = x;
                settings->params->eye1.coords.y = y;
            }
        }
    }

    minimum = MARGIN; distance = MARGIN;

    // ** Search right eye, i.e. eye2
    for (y = settings->params->eye2.ylimitDown; y < settings->params->eye2.ylimitUp; y += 2)
    {
        for (x = settings->params->eye2.xlimitLeft; x < settings->params->eye2.xlimitRight; x += 2)
        {
            cvSetImageROI(GRAYfaceImage, cvRect(x, y, images[0]->width, images[0]->height));
            if (settings->params->varianceCheck == 1)
            {
                if (calculateSTD(GRAYfaceImage) <= (double)(settings->params->variance))
                {
                    cvResetImageROI(GRAYfaceImage);
                    continue;
                }
            }
            cvFlip( GRAYfaceImage, Image2Comp, 0 );
            cvResetImageROI(GRAYfaceImage);

            // ** Decide whether it is an eye or not
            cvEigenDecomposite( Image2Comp, settings->params->nEigens,
                                eigens, 0, 0, averageImage, weights );
            cvEigenProjection( eigens, settings->params->nEigens,
                               CV_EIGOBJ_NO_CALLBACK, 0, weights, averageImage, projection );
            distance = cvNorm(Image2Comp, projection, CV_L2, 0);

            if (distance < minimum && distance > 0)
            {
                settings->params->eye2.eyefound = true;
                minimum = distance;
                settings->params->eye2.distance = distance;
                settings->params->eye2.coords.x = x;
                settings->params->eye2.coords.y = y;
            }
        }
    }

    // ** Cleanup
    cvReleaseImage(&Image2Comp);
    cvReleaseImage(&GRAYfaceImage);
    for (i = 0; i < settings->params->nImages; i++)
    {
        cvReleaseImage(&images[i]);
        cvReleaseImage(&eigens[i]);
    }
    cvReleaseImage(&averageImage);
    cvReleaseImage(&projection);
    free(images);
    free(eigens);
}
```

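History

- v1.0: First version of TrackEye

- v2.0: Second version of TrackEye. TrackEye v2.0 now supports:
  - Two different face detection algorithms:
    - Haar face tracking
    - CAMSHIFT
  - Two different eye detection algorithms:
    - Adaptive principal component analysis (PCA)
    - Template matching
  - Selection of the tracking algorithms by the user through the GUI at the start of the process
  - Selectable input source:
    - Webcam
    - Video file

* Note that TrackEye was written with OpenCV Library v3.1, so make sure to use that version when rebuilding.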